My Other Publications: The Material from the Miraculous (Video Essay).

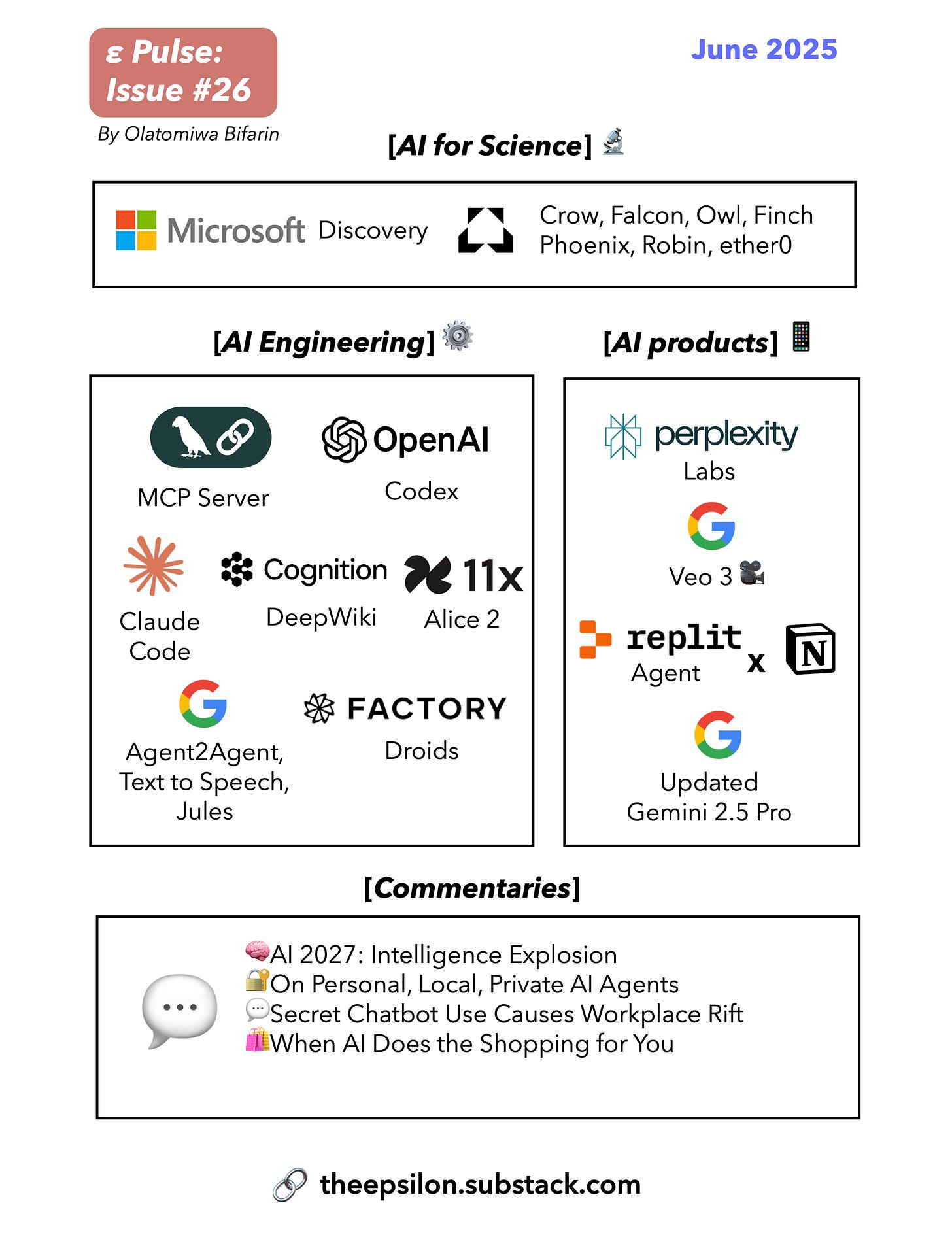

[LLM/AI for Science] 🤖 🦠 🧬

[I]: Microsoft Discovery

At Microsoft Build 2025, Microsoft introduced Microsoft Discovery, an agentic AI platform for R&D. It gives scientists and engineers a suite of specialized AI agents and a graph-based knowledge engine. Built on Microsoft Azure, Discovery is designed to accelerate scientific outcomes, enabling researchers to innovate with greater speed, scale, and accuracy.

Microsoft Discovery's extensibility, they say, is a key feature, enabling the integration of proprietary models and data with Microsoft's cutting-edge innovations and open-source solutions. As a powerful example of its capabilities, they demoed the discovery of a new coolant prototype for data centers.

“At Microsoft, our researchers have leveraged the advanced AI models and high-performance computing (HPC) simulation tools in Microsoft Discovery to discover a novel coolant prototype with promising properties for immersion cooling in datacenters in about 200 hours—a process that otherwise would have taken months, if not years.”

[II]: Future House Platform

I have covered several of FutureHouse's works in my newsletter, and in fact, I would argue that they are the most frequent entries in my AI for Science section. That is largely because they were one of the first groups to start this AI Scientist initiative. (FutureHouse is a nonprofit initiative supported by Eric Schmidt.) Their platform was recently released to the public.

The FutureHouse Platform is an ecosystem of AI agents designed to accelerate scientific discovery by addressing the overwhelming volume of scientific literature and data that researchers face. Launched to democratize access to advanced scientific tools, the platform features four publicly available specialized agents—Crow, Falcon, Owl, and Phoenix—each tailored to streamline distinct aspects of the research process.

Crow serves as a general-purpose agent, excelling in searching scientific literature and providing concise, scholarly answers by analyzing full-text papers. Falcon specializes in deep literature reviews (think deep research). With access to specialized databases like OpenTargets (for disease-target associations), it is ideal for meta-analyses and for identifying contradictions in research. Owl, previously known as HasAnyone, focuses on novelty assessment, conducting comprehensive precedent searches to help researchers avoid redundant work and pinpoint unexplored areas. Phoenix, an experimental agent, supports chemical informatics, connecting to chemistry databases to aid in molecular prediction and synthesis planning for new molecules. To my knowledge, they have an additional agent called Finch, for data analysis, which is currently in beta (and which I have participated in). It automates important bioinformatics tasks, and I can only say good things about it from my use cases.

Additionally, FutureHouse introduced Robin, a multi-agent system to automate the entire scientific process, from hypothesis generation to experimental validation. I have not read the Robin paper yet, but I plan to, and if I can make the time to write an essay on recent developments in automating science with LLM agents, I will discuss it there. Also, as I was writing that last sentence, I checked my X feed and the first post I saw (after Elon's) was that FutureHouse has released its first scientific reasoning model, ether0, which can reason over chemistry tasks. I would include all of these in my proposed essay if I end up writing one.

[AI/LLM Engineering] 🤖🖥⚙

[I]: LangGraph Agents Exposed as MCP Servers.

LangGraph Platform now supports MCP (Model Context Protocol), an open standard designed to simplify how LLMs connect with external tools and data. With this integration, deployed LangGraph agents are immediately available as MCP tools via a streamable HTTP endpoint, with built-in security and observability through LangSmith. A demonstration shows how easy it is to use these agents as tools within MCP-compatible clients, like Claude Desktop, and highlights the potential for multi-agent collaboration, offering developers a simpler way to deploy, connect, and scale their AI agents.

[II]: Agent2Agent Protocol

In a significant move towards a more collaborative and interconnected AI landscape, Google unveiled the Agent2Agent (A2A) protocol in April. It is an open standard designed to enable collaboration between AI agents, regardless of their developer or underlying platform. The initiative aims to break down the silos that currently exist between different AI agents. The A2A protocol allows agents to securely exchange information, delegate tasks, and coordinate complex workflows. This opens up new possibilities for automation, where specialized agents work in concert to tackle multi-step processes, such as a hiring workflow in which different agents handle candidate sourcing, scheduling, and communication. See the demo below.

The core of the A2A protocol is built on the principles of discoverability, interoperability, and asynchronicity, allowing agents to find each other, communicate effectively, and manage long-running tasks without direct oversight. By providing a common language for interaction, Google and its partners envision a future where users can assemble teams of AI agents tailored to their specific needs. Here is the GitHub page and Documentation. Here is a nice video explaining A2A.

“A2A (Agent2Agent Protocol): Facilitates dynamic, multimodal communication between different agents as peers. It's how agents collaborate, delegate, and manage shared tasks.”
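To make the three principles a little more concrete, here is a toy, in-memory sketch of the hiring workflow mentioned above. It is not the A2A SDK or its wire format: `AgentCard`, `Registry`, `Task`, and `delegate` are hypothetical names I made up for illustration, loosely inspired by the idea that agents advertise what they can do and that delegated tasks carry a state. Asynchronicity is not really modeled here; the `state` field only stands in for what a client would poll on a long-running task.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional, Set


class TaskState(Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"


@dataclass
class AgentCard:
    """What an agent advertises so that other agents can discover it (hypothetical)."""
    name: str
    skills: Set[str]
    handler: Callable[[str], str]


@dataclass
class Task:
    """A unit of delegated work with a state a caller could poll (hypothetical)."""
    skill: str
    payload: str
    state: TaskState = TaskState.SUBMITTED
    result: Optional[str] = None


class Registry:
    """Discoverability: agents publish cards, callers look them up by skill."""

    def __init__(self) -> None:
        self._cards: List[AgentCard] = []

    def publish(self, card: AgentCard) -> None:
        self._cards.append(card)

    def find(self, skill: str) -> AgentCard:
        for card in self._cards:
            if skill in card.skills:
                return card
        raise LookupError(f"no agent advertises skill {skill!r}")


def delegate(registry: Registry, task: Task) -> Task:
    """Interoperability: any agent with the right skill can take the task."""
    agent = registry.find(task.skill)
    task.state = TaskState.WORKING
    task.result = agent.handler(task.payload)
    task.state = TaskState.COMPLETED
    return task


# Two specialized agents from the hiring example, with stand-in handlers.
registry = Registry()
registry.publish(AgentCard("sourcer", {"candidate_sourcing"},
                           lambda role: f"3 candidates found for {role}"))
registry.publish(AgentCard("scheduler", {"scheduling"},
                           lambda who: f"interview booked with {who}"))

task = delegate(registry, Task("scheduling", "candidate A"))
print(task.state.value, "-", task.result)
```

The point of the sketch is only the shape: the caller never needs to know which agent exists or who built it, just which skill it needs.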

[III]: Building and Evaluating AI Agents

Here, Sayash Kapoor gives a talk on the optimistic hype surrounding AI agents and contrasts it with the significant hurdles that remain in their development and evaluation. While rudimentary agents like ChatGPT reasoning models and Claude (with tools) have shown some success, the more ambitious and capable autonomous agents that have been envisioned are still a distant reality, with many real-world attempts failing to meet expectations. The core of the problem, as Kapoor explains, lies in the inherent difficulty of evaluating these agents. He cites examples of companies making unsubstantiated claims and the prevalence of AI hallucination in specialized fields.

Kapoor outlines several key challenges in the evaluation process, emphasizing that the static benchmarks traditionally used for language models are insufficient for agents that interact with the complexities of the real world. Relying on them can lead to a skewed perception of an agent's true capabilities. He further distinguishes between an AI's capability and its reliability, arguing that while a model may demonstrate impressive skills, it often lacks the consistency required for dependable real-world use. To address this, Kapoor advocates a shift in AI engineering towards a reliability engineering mindset, to bridge the gap between what an AI can do and what it can be trusted to do.
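One way to make the capability/reliability distinction concrete is a bit of arithmetic. This is my own illustration, not something taken from the talk, and it assumes a fixed per-attempt success rate `p` and independent attempts (both simplifications). "Capability" here means the agent succeeds at least once in `k` tries; "reliability" means it succeeds on every one of `k` tries.

```python
def capability(p: float, k: int) -> float:
    """Probability that at least one of k independent attempts succeeds."""
    return 1 - (1 - p) ** k


def reliability(p: float, k: int) -> float:
    """Probability that all k independent attempts succeed."""
    return p ** k


if __name__ == "__main__":
    k = 5
    for p in (0.7, 0.9, 0.99):
        print(f"p={p:.2f}  at least once in {k}: {capability(p, k):.5f}  "
              f"every time in {k}: {reliability(p, k):.5f}")
```

Under these assumptions, an agent that succeeds 90% of the time per attempt will get a task right at least once in five tries about 99.999% of the time, but will get it right all five times only about 59% of the time. A demo shows the first number; a user lives with the second.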

[IV]: Gemini Text to Speech

“The Gemini API can transform text input into single speaker or multi-speaker audio using native text-to-speech (TTS) generation capabilities. Text-to-speech (TTS) generation is controllable, meaning you can use natural language to structure interactions and guide the style, accent, pace, and tone of the audio.

The TTS capability differs from speech generation provided through the Live API, which is designed for interactive, unstructured audio, and multimodal inputs and outputs. While the Live API excels in dynamic conversational contexts, TTS through the Gemini API is tailored for scenarios that require exact text recitation with fine-grained control over style and sound, such as podcast or audiobook generation.”

Link. A tutorial walk-through.

[V]: 11x: Building and Scaling an AI Agent

This is a good, high-level talk on agent engineering, focused on architecture. It is by folks from 11x at "Interrupt", an AI agent conference by LangChain. They explain the limitations of the original Alice (their digital worker), which relied on manual input, did only basic research, and had no self-learning capabilities. This led to the development of Alice 2, envisioned as a more advanced agent with a chat interface, deep research abilities, and automatic replies.

The presentation covers the technical challenges and architectural decisions made during the 3-month development process. They explored and moved beyond ReAct and Workflow architectures, ultimately settling on a more flexible and high-performing multi-agent system. See a demo of their product.

[VI]: SWE Agents: Codex, Jules, Claude Code, Factory, Deep Wiki

There has been a big surge of software engineering agents from the top tech companies (and little tech too). I initially wanted to dedicate a Pulse post to it, but time hasn't been a friend (it rarely is), so I will just run this short abstract on Codex from OpenAI and link to several tutorials I watched. Such an interesting time we live in.

OpenAI Codex:

“Codex: a cloud-based software engineering agent that can work on many tasks in parallel. Codex can perform tasks for you such as writing features, answering questions about your codebase, fixing bugs, and proposing pull requests for review; each task runs in its own cloud sandbox environment, preloaded with your repository. Codex is powered by codex-1, a version of OpenAI o3 optimized for software engineering. It was trained using reinforcement learning on real-world coding tasks in a variety of environments to generate code that closely mirrors human style and PR preferences, adheres precisely to instructions, and can iteratively run tests until it receives a passing result.”

Blog, Demo, Tweet, Fireship, Latent Space, Codex tutorial, Codex CLI tutorial.

As I stated earlier, there is more: OpenAI Codex CLI vs. Claude Code, Love letter to Claude Code, Google Jules. For something more comprehensive, especially for teams of human SWEs, see this tutorial about Factory.

And then there is DeepWiki. DeepWiki is not a SWE agent per se, but it is a very powerful AI system that lets you ask questions over GitHub repositories. All you have to do is replace "github" in the repository's URL with "deepwiki". See Demo. I am definitely using this one.
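If you want to script the URL swap, here is a minimal sketch. It assumes the DeepWiki host is `deepwiki.com` and that the path after the host stays the same; `to_deepwiki` is just a helper name I made up, and the repository in the usage line is a placeholder, not a real project.

```python
from urllib.parse import urlsplit, urlunsplit


def to_deepwiki(repo_url: str) -> str:
    """Swap the GitHub host for the (assumed) DeepWiki host, keeping the path."""
    parts = urlsplit(repo_url)
    if parts.netloc.lower() not in ("github.com", "www.github.com"):
        raise ValueError(f"not a GitHub URL: {repo_url!r}")
    # Drop any query string or fragment; only owner/repo matters here.
    return urlunsplit((parts.scheme, "deepwiki.com", parts.path, "", ""))


# Placeholder repository, for illustration only.
print(to_deepwiki("https://github.com/some-owner/some-repo"))
```

Whether a given repository is already indexed on DeepWiki is a separate question; the helper only rewrites the address.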

[AI X Industry + Products] 🤖🖥👨🏿💻

[I]: Veo 3: Video Generation

You have probably seen the new trend of AI videos on social media, either 8 seconds long or longer versions made of 8-second clips stitched together. They come from Google's Veo 3, which is a huge upgrade for video generation. And I cannot help but think that sitting on a pile of YouTube videos didn't hurt.

Here is the first one I generated, Boethius saying,

"What is a person? I believe a person is an individual substance of a rational nature."

Then I tried it with this Nigerian Interior Designer.

If you really want to see the fun stuff, check this out here, for example. So if you want any evidence that the next decade of our lives will be markedly different from the prior years, this is the kind of stuff you cite. That includes most of the stuff in this newsletter, to be frank.

[II]: Perplexity Labs

Perplexity recently released a research agent they are calling Labs. The first question I had was: how is that different from deep research? From their blog:

“While regular Perplexity search provides answers to specific questions, and the "Research" mode (formerly called "Deep Research") generates comprehensive analyses, Perplexity Labs goes a step further by creating complete projects with multiple components. Labs has access to more advanced tools and can generate files, presentations, images, mini-apps, and other interactive elements that aren't available in the Research mode.”

You can find example projects here; this biomedical example is particularly firm. I tried it out to plan a trip, and I was quite impressed.

Also, in my experience, Perplexity Labs is better than ChatGPT/Gemini deep research because it automates the entire research process, generates actionable assets, provides better observability, and delivers structured, project-based outputs. This makes it uniquely suited for folks who need more than just (text) answers and want a complete, ready-to-use deliverable.

[III]: Replit X Notion

Replit Agent now supports integration with Notion.

See introduction and tutorials.

It will enable products such as content management and publishing, data collection and analytics, and even customer support and service management.

[IV]: Gemini 2.5 Pro Upgrade

I try the vast majority of models released by the top labs/companies, and to be clear, that is not easy to do given the sheer number of models out there. But of all the models I have tried, Gemini 2.5 Pro pretty much stands out. o3 comes close, but I just hate its diction sometimes; it gets pompous and all that. About a month ago, Google released an upgrade. Here is also a good tutorial on Gemini 2.5 Pro.

[AI + Commentary] 📝🤖📰

[I]: 🧠AI 2027: Intelligence Explosion

I recently read "AI 2027", which is one of the more talked-about scenario documents on AI safety. It is my recommended reading for anyone with even the slightest interest in AI forecasting and safety.

I wrote a summary and commentary about it:

Link below:

[II]: 🔐On Personal, Local, Private AI Agents

Soumith Chintala, a co-creator of PyTorch, has a really cool commentary here on (the future of) personal AI agents that run locally and privately. He starts by explaining that his interest was sparked by his positive experience with an AI news aggregator and his work in robotics. Chintala argues that for an AI agent to be truly useful, it needs access to a person's complete personal context, something that is difficult to achieve with current cloud-based solutions due to privacy and data access limitations. He advocates running these personal agents locally, on a device like a Mac Mini at home. What I like about his commentary is the clear-eyed justification for personal, local, private AI agents.

[III]: 💬Secret Chatbot Use Causes Workplace Rift

“More employees are using generative AI at work and many are keeping it a secret.

Why it matters: Absent clear policies, workers are taking an "ask forgiveness, not permission" approach to chatbots, risking workplace friction and costly mistakes.

The big picture: Secret genAI use proliferates when companies lack clear guidelines, because favorite tools are banned or because employees want a competitive edge over coworkers.

>Fear plays a big part too — fear of being judged and fear that using the tool will make it look like they can be replaced by it.

By the numbers: 42% of office workers use genAI tools like ChatGPT at work and 1 in 3 of those workers say they keep the use secret, according to research out this month from security software company Ivanti.

>A McKinsey report from January showed that employees are using genAI for significantly more of their work than their leaders think they are.

>20% of employees report secretly using AI during job interviews, according to a Blind survey of 3,617 U.S. professionals.

…”

Source: Axios. Interesting and unsurprising findings in the piece on genAI usage at work.

The pace of change is so fast that I wonder how many "leaders" are able to keep up, let alone write useful guidelines. Keeping up is *literally* a day job.

[IV]: 🛍When AI Does the Shopping for You

The next wave of commerce will be agentic.

From malls to websites to apps, and now agents: this essay argues the case correctly, with a more developed thesis than some other essays I have read on the subject.

One thing to add is that developer toolkits for agentic commerce have been growing, e.g. see the MCP payment integration with Stripe. Also, see the Stripe documentation for agentic payments.

[Screenshots] 📝🤖📰

[X] 🎙 Podcast on AI and GenAI

(Additional) podcast episodes I listened to over the past few weeks:

Please share this newsletter with your friends and network if you found it informative!