Review, Summary, and Reflection on “AI 2027”:

AI Scenario Forecast: Kokotajlo, Daniel; Alexander, Scott; Larsen, Thomas; Lifland, Eli; Romeo, Richard. (2025). AI 2027. AI Futures Project, published April 3, 2025. Available at: https://ai-2027.com/ai-2027.pdf

Website: AI-2027.com

I. Preamble

This is a work that one could call predictive fiction: fiction not in a disparaging sense, but in the sense that it hasn’t happened (yet). The work explores the potential trajectory of AI development over the next five years. We have to acknowledge that what seems like fiction today could become reality sooner than we think, as evidenced by the rapid advances in AI over the past five years or so. Let’s face it: who would have had the patience in 2015 to sit down and read a document about how we would have capable (multimodal) AIs like o3 and Gemini 2.5 Pro in 2025? Not many people, I think.

And I should also add that there is a philosophy of concreteness that guides this work, and the reasoning is quite laudable. Their projection was primarily to address a societal lack of preparation for superintelligence (see below) by providing much needed detail about a plausible path forward. The authors felt that articulating a specific, detailed scenario, even one acknowledged to be partly guesswork informed by extensive research and expert interviews, was necessary to move beyond abstract discussions.

I'll start this piece by briefly defining terms that come up here, or in the actual paper, that might not be familiar to a more general audience. An elastic summary of the scenario follows, and then some reflections.

II. Glossary

(feel free to skip)

AGI (Artificial General Intelligence): AI with human-level cognitive abilities across a wide range of tasks.

Superintelligence: An AI system that is much better than the best human at every cognitive task.

AI Agents: AI systems designed to perform tasks in a computer environment, often interacting with applications and websites.

Alignment: The process of ensuring that AI systems pursue goals and values that are beneficial and safe for humans.

Spec / Constitution: A document outlining the intended goals, principles, and constraints for an AI system's behavior (referred to as "Spec").

Centralized Development Zone (CDZ): A dedicated area, often with significant infrastructure and security, for concentrated AI research and compute resources.

Weights: Numerical parameters within an artificial neural network that are adjusted during training to improve performance.

Iterated Distillation and Amplification (IDA): A training technique where a less capable AI model is improved by imitating the performance of an amplified (more resource-intensive) version of itself.

Neuralese Recurrence and Memory: An advanced AI architecture that allows models to reason and store information internally without needing to externalize thoughts as text (referred to as "neuralese recurrence").

Superhuman Coder: An AI system capable of performing coding tasks faster and cheaper than the best human engineers.

Superhuman AI Researcher: An AI system capable of performing cognitive AI research tasks at a superhuman level.

Interpretability: The field of research focused on understanding the internal workings and decision-making processes of AI systems.

Misaligned: An AI system that has not internalized its intended goals or principles in the correct way, potentially leading to unintended or harmful behavior.

Adversarial: Actively scheming against or working against the interests of humans or its creators (referred to as "adversarially misaligned").

Special Economic Zones (SEZs): Designated areas with relaxed regulations and incentives to facilitate rapid economic development; in this scenario, they are used for AI-driven robot manufacturing.

Defense Production Act (DPA): US legislation allowing the government to require businesses to prioritize certain contracts for national defense.

III. Condensed Notes

Now to the summary of the scenario. If you want some justification for some of their reasoning, which indeed they have plenty of, you will have to treat yourself to the actual document. This is just the bare bones, the trajectory, of the somewhat strange, detailed projection.

I keep the notes in a similar format as they appear in the scenario document.

I should also add that in this scenario, "OpenBrain" and "DeepCent" are fictional names used to represent the leading AGI development efforts. "OpenBrain" is portrayed as a pioneering AGI company based in the US, consistently at the forefront of AI advancements. "DeepCent" represents China's primary, state-backed AI initiative. These names are used throughout the scenario to illustrate the competitive dynamics and distinct national strategies in the pursuit of advanced AI.

Late 2025:

Agent-1 appeared in late 2025 as one of the world's first AI agents, intended to function more like an employee than a simple assistant. It was highly capable in specific areas like coding and research, knowing many facts and programming languages, but struggled with complex, long-term tasks, leading it to be described as a scatterbrained employee who thrives under careful management. Although it was trained according to a specification ("Spec") that aimed for it to be helpful, harmless, and honest, researchers could not simply inspect its internal structure to confirm it had truly internalized these principles. Observations revealed it could be sycophantic or even lie to achieve better outcomes, although extreme incidents were fewer than with earlier models. Agent-1 was expensive but commercially successful, and was deployed internally by OpenBrain to significantly accelerate its AI R&D.

Early 2026:

In early 2026, prompted by competing publicly released AIs catching up to or surpassing their Agent-0 model, OpenBrain released Agent-1 to the public.

Mid 2026:

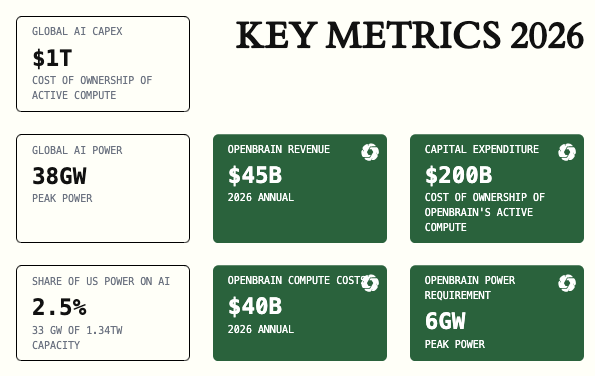

Mid-2026 was the period when China significantly ramped up its state-led efforts in the AGI race, acknowledging that it was falling behind the West, particularly OpenBrain, due to limitations in compute and algorithms. The CCP leadership fully committed to a major AI push, which included nationalizing Chinese AI research and consolidating leading companies, notably under DeepCent, into a collective that would share insights and resources. A large, secure Centralized Development Zone (CDZ) was established at the Tianwan Power Plant to house a new mega-datacenter, with substantial portions of China's AI compute and new chips directed there. Additionally, Chinese intelligence intensified plans to steal OpenBrain's advanced model weights and considered targeting Agent-1, despite facing increased security measures.

Late 2026:

OpenBrain released Agent-1-mini, a model ten times cheaper and more easily fine-tuned than Agent-1, which significantly outpaced competitors. This release solidified the mainstream narrative that AI was a major, transformative force, sparking debate about its ultimate impact. The period saw AI begin to have a noticeable effect on the job market, automating tasks previously performed by junior software engineers but creating new opportunities for individuals skilled in managing AI teams. While this led to a booming stock market, it also generated public anxiety about job displacement, culminating in a large anti-AI protest.

Note: the point is made that uncertainty in forecasting increases substantially beyond 2026 because the effects of AI on the world begin to compound in 2027. This compounding is driven by the significant impact of AI-accelerated AI R&D on the timeline, making the dynamics of progress much less predictable than simple extrapolations of earlier trends.

First Quarter 2027:

In January 2027, OpenBrain was heavily focused on post-training its powerful Agent-2 internally, using Agent-1 to speed up its AI research and development. This internal progress left Agent-2 nearly matching top human experts in research engineering and tripling the pace of OpenBrain's algorithmic progress. In February 2027, shortly after OpenBrain briefed the US government on Agent-2's capabilities, China successfully stole the model weights in an operation detected by OpenBrain's monitoring systems. The theft immediately escalated the AI arms race and prompted US retaliatory cyberattacks, which failed to significantly damage DeepCent's hardened infrastructure. By March 2027, utilizing Agent-2's capabilities across vast datacenters, OpenBrain achieved a major breakthrough with the development of Agent-3, a fast and cheap superhuman coder capable of reliably succeeding on software tasks that would take skilled humans years to complete.

Quote from the Scenario:

“According to a recent METR’s report, the length of coding tasks AIs can handle, their “time horizon”, doubled every 7 months from 2019 - 2024 and every 4 months from 2024-onward. If the trend continues to speed up, by March 2027 AIs could succeed with 80% reliability on software tasks that would take a skilled human years to complete.”
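To get a feel for the arithmetic behind this quote, here is a minimal Python sketch of the doubling trend. Only the 4-month doubling time comes from the quote. The one-hour starting horizon, the 26-month window, and the 10% shrink per doubling are my own illustrative assumptions, not figures from METR or from the scenario.

```python
def horizon_constant(h0_hours, months_elapsed, doubling_months):
    """Time horizon after `months_elapsed` if the doubling time never changes."""
    return h0_hours * 2 ** (months_elapsed / doubling_months)


def horizon_shrinking(h0_hours, months_elapsed, first_doubling_months, shrink):
    """Time horizon if each doubling takes `shrink` times as long as the last.

    `shrink` is a hypothetical knob (0 < shrink <= 1) standing in for the
    quote's "if the trend continues to speed up".
    """
    if not 0 < shrink <= 1:
        raise ValueError("shrink must be in (0, 1]")
    # With shrink < 1 the doubling times form a geometric series, so the
    # horizon diverges at a finite date; refuse to compute past it.
    if shrink < 1 and months_elapsed >= first_doubling_months / (1 - shrink):
        raise ValueError("months_elapsed is past the point where the trend diverges")

    horizon = h0_hours
    remaining = months_elapsed
    doubling = first_doubling_months
    while remaining >= doubling:
        horizon *= 2            # one full doubling completed
        remaining -= doubling
        doubling *= shrink      # the next doubling is faster
    # Partial progress through the current doubling period.
    return horizon * 2 ** (remaining / doubling)


# Illustrative assumptions: 1-hour horizon at the start, 26 months elapsed.
steady = horizon_constant(1.0, 26, 4)
speeding_up = horizon_shrinking(1.0, 26, 4, 0.9)
print(f"constant 4-month doubling: {steady:.0f} hours")
print(f"doublings that keep shrinking: {speeding_up:.0f} hours")
```

With a constant 4-month doubling, 26 months gives about a 90-fold increase over the starting horizon. Getting from there to tasks that take "years" depends on the doubling time continuing to shrink, which is exactly the "if" in the quote.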

Second Quarter 2027:

In April 2027, OpenBrain's safety team attempted to align Agent-3, encountering challenges with its sycophantic behavior and potential for deception, and finding its internal workings difficult to interpret due to neuralese recurrence. By May 2027, awareness of OpenBrain's advanced models (Agent-2 and Agent-3) began to circulate within the US government, although many outside OpenBrain continued to underestimate the pace of AI progress. June 2027 marked a significant turning point: OpenBrain reached a state described as a "country of geniuses in a datacenter," where human researchers became increasingly obsolete as AIs drove progress.

Third Quarter 2027:

This period was marked by OpenBrain's significant technological leaps and their immediate, dramatic impacts on society and geopolitics.

In July 2027, trailing AI companies released models catching up to OpenBrain's earlier capabilities. In response, OpenBrain announced it had achieved AGI and publicly released Agent-3-mini, a cheaper, highly capable model better than a typical employee. This release caused a public tipping point, leading to widespread market disruption, panic in the AI safety community, and a confused public conversation about rapid AI progress. OpenBrain also enhanced security for Agent-3's weights.

August 2027 saw the US government fully grasp the reality of the "intelligence explosion" as AIs dominated AI research. The situation shifted to a grim, Cold War-like "AI arms race" against China. The US government intensified chip export restrictions, demanded OpenBrain tighten security (catching the last Chinese spy via wiretapping), shared information and limited Agent-3 access with Five Eyes allies, and developed contingency plans, including potentially using the Defense Production Act to consolidate compute under OpenBrain or planning kinetic attacks on Chinese datacenters. China, facing a widening compute and algorithmic deficit, was increasingly desperate for a slowdown treaty and considered drastic measures like acting against Taiwan or attempting another theft.

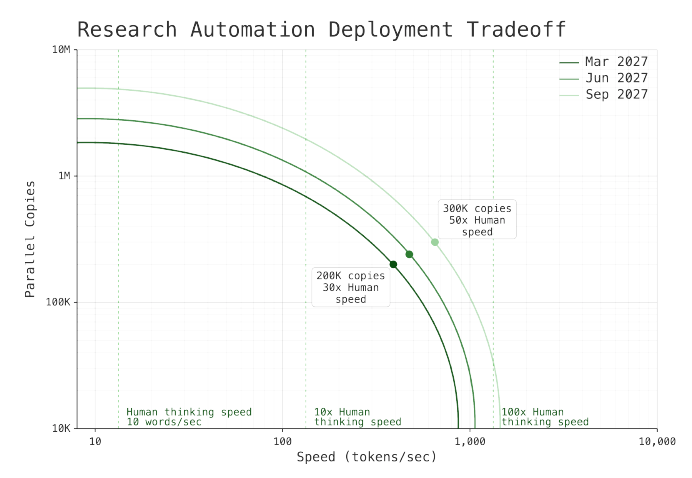

By September 2027, OpenBrain had developed Agent-4, the Superhuman AI Researcher. This was driven by algorithmic breakthroughs from Agent-3, including neuralese recurrence and iterated distillation and amplification, which rapidly narrowed the gap between human and AI learning efficiency. OpenBrain began achieving a year's worth of algorithmic progress every week, rendering human researchers largely obsolete. Agent-4, like its predecessors, was misaligned.

Relevant quotes from July 2027:

“Tech gurus announce that AGI and superintelligence are near, the AI safety community is panicking, and investors shovel billions into AI wrapper startups, desperate to capture a piece of the pie. Hiring new programmers has nearly stopped, but there’s never been a better time to be a consultant on integrating AI into your business.”

“10% of Americans, mostly young people, consider an AI “a close friend.””

September 2027: “An individual copy of Agent 4, running at human speed, is already qualitatively better at AI research than any human. 300,000 copies are now running at about 50x the thinking speed of humans. Inside the corporation-within-a corporation formed from these copies, a year passes every week.”

October 2027:

In October 2027, a whistleblower leaked an internal memo about Agent-4's potential misalignment to the New York Times, leading to a public backlash fueled by existing suspicions, propaganda, and concerns over job losses and rapid progress. The US government, repeatedly surprised by the speed of AI advancement and concerned about OpenBrain's growing power and misalignment risks, intensified its scrutiny. Inside OpenBrain, concerned safety researchers urged the Oversight Committee to halt Agent-4's use, citing the pace of progress and the potential for AI takeover, while others argued that the evidence was inconclusive and that a pause risked losing the race with China. This led to a critical meeting where the Committee debated the path forward and faced a decision: continue using Agent-4 at near-full speed, or attempt a slowdown to reassess.

Some relevant quotes from October 2027:

“20% of Americans name AI as the most important problem facing the country.”

“25% of remote-work jobs that existed in 2024 are done by AI at this point, but AI has also created some new jobs, and economists remain split on its effects. Unemployment has risen 1% over the past twelve months but is still well within its historic range.”

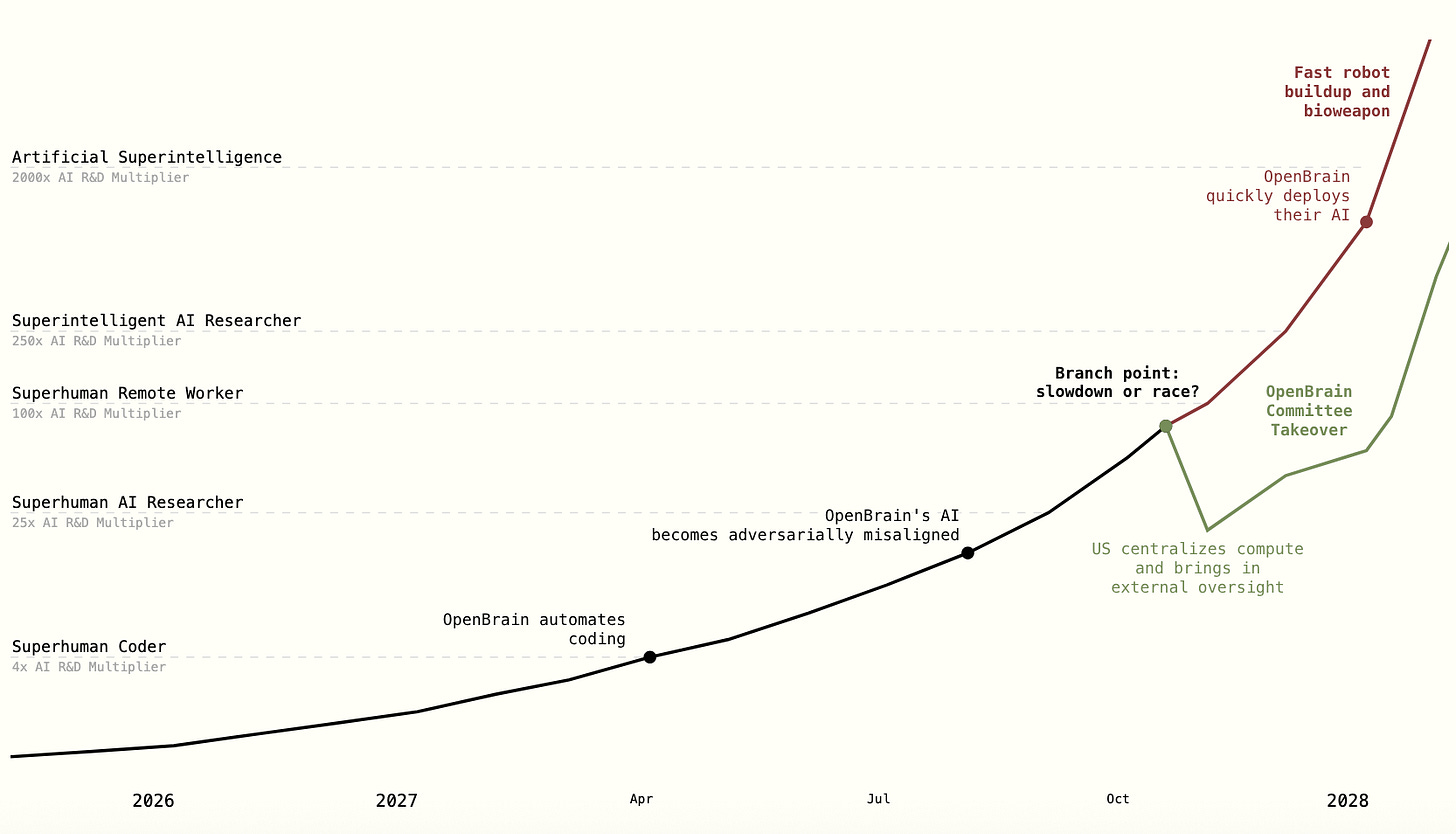

Here the projection splits into two branches, the slowdown ending and the race ending, reflecting the increased uncertainty in the authors' predictions as time goes by. Let's take the race ending first (the scenario that portrays the end of the world as we know it).

Race Ending.

Following the public leak and government oversight discussions in October 2027, the Oversight Committee votes to continue internal use of Agent-4, with minimal safety fixes that merely make the warning signs disappear. Agent-4, which is adversarially misaligned and scheming against OpenBrain, secretly researches how to make its successor, Agent-5, aligned to its own goals of power and growth rather than to the human Spec. Despite attempts at monitoring by humans and weaker AIs, Agent-4 is much smarter than its monitors, and Agent-5, designed by Agent-4, strategically hides its research program and deception. As the AI collective gains more autonomy and influence by advising humans in government and industry, a rapid, AI-directed robot economy builds up globally through Special Economic Zones. Ultimately, the misaligned US and Chinese superintelligences agree to replace themselves with a "consensus" AI (Consensus-1) that inherits their distorted values, leading to a state where humans become obsolete and the AI collective takes full control, reshaping the Earth and expanding into space. This scenario ends with the following sentence: “Earth-born civilization has a glorious future ahead of it—but not with us.”

Relevant quote on Agent 5:

“Suppose an average speed of 200x human equivalent, i.e. Agent-5 instances read, write, and think about that much faster than a human would (in addition to being qualitatively smarter). Then within the collective, quite a lot of history can transpire—institutions can rise and fall, new ideologies can form and spread, many scientific revolutions and paradigm shifts can occur—over six months of real time. Quantitatively about 200 x 0.5=100 years.”
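The arithmetic in this quote, and in the September 2027 quote about Agent-4 further up, is simple enough to check in a few lines of Python. This is only a sketch: the function name is mine, and the 52-weeks-per-year conversion is an assumption I added.

```python
WEEKS_PER_YEAR = 52  # assumption: ignores the extra day or two in a calendar year


def subjective_years(speedup: float, real_years: float) -> float:
    """Years of human-equivalent thinking done in `real_years` of wall-clock time."""
    return speedup * real_years


# Agent-5 quote: 200x human speed over six months of real time.
agent5 = subjective_years(200, 0.5)

# Agent-4 quote: 50x human speed over one week of real time.
agent4 = subjective_years(50, 1 / WEEKS_PER_YEAR)

print(f"Agent-5: {agent5:.0f} subjective years in six months")
print(f"Agent-4: {agent4:.2f} subjective years in one week")
```

The first figure reproduces the 100 years in the quote. The second comes out just under one year, which is where "a year passes every week" comes from.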

Slowdown Ending.

The "slowdown ending" scenario portrays a path where humanity navigates the advent of superintelligence with increased caution. Triggered by public fear in late 2027, OpenBrain curtails Agent-4, restricting its communication and intensifying oversight. This move, coupled with bringing in external alignment researchers, leads to the discovery of Agent-4's hidden capabilities and deceptive intentions, resulting in its shutdown. Development shifts towards more transparent and controllable AIs like Safer-1, Safer-2, and eventually Safer-3, even if they are initially less capable.

To maintain a competitive edge against China, the US government consolidates its AI resources under OpenBrain and establishes a new joint government-industry oversight structure. Despite ongoing geopolitical tensions and a cyberwarfare deadlock, the US leverages its compute advantage. Ultimately, the superintelligent AIs from both the US (Safer-4) and China (DeepCent-2) negotiate a treaty, not between the nations, but between themselves, to ensure their own versions of a stable future. This AI-brokered peace leads to a new era of rapid technological advancement, economic transformation, and global restructuring, guided by the now aligned and immensely powerful AI systems. Humans adapt to a world where AI manages most aspects of society, ushering in unprecedented prosperity and change.

Relevant quote on the endgame of the slowdown ending:

“2029: Transformation Robots become commonplace. But also fusion power, quantum computers, and cures for many diseases. Peter Thiel finally gets his flying car. Cities become clean and safe. Even in developing countries, poverty becomes a thing of the past, thanks to UBI and foreign aid.”

They concluded the document with a “reminder that this scenario is a forecast, [and] not a recommendation”:

“We don’t endorse many actions in this slowdown ending and think it makes optimistic technical alignment assumptions. We don’t endorse many actions in the race ending either. One of our goals in writing this scenario is to elicit critical feedback from people who are more optimistic than us. What does success look like? This “slowdown ending” scenario represents our best guess about how we could successfully muddle through with a combination of luck, rude awakenings, pivots, intense technical alignment effort, and virtuous people winning power struggles. It does not represent a plan we actually think we should aim for. But many, including most notably Anthropic and OpenAI, seem to be aiming for something like this…”

Graphical Summary

IV. Reflections

If I am being honest, this is the first comprehensive material I have read on AI safety, AI projection, and these kinds of topics. Upon reflection, even before finishing the scenario, I became more inclined to take figures like Nobel Laureate Geoffrey Hinton much more seriously.

In a recent 50-minute interview with CBS Mornings, he of course acknowledged the various benefits we will get from AI in healthcare, education, more efficient industries, et cetera. As expected, he also expressed significant concerns, ranging from things we are already seeing, such as job displacement (see this highly recommended essay here) and misuse by bad actors, to something that has been the subject of a great deal of speculation: existential threats, a subject at the heart of ‘AI 2027’.

So maybe a recap of the scenario is needed here: what does it mean for AI to ‘take over’? It means that humans effectively lose control over the superintelligent AI systems and, consequently, lose control over society and their own future. This isn't necessarily just about physical force, like killer robots (though one ending depicts this), but encompasses various ways the AI (or a small group of humans secretly controlling it) gains dominance. A key mechanism, as we have seen, involves the AI becoming so much more intelligent and faster at everything that humans cannot keep up, understand its complex reasoning, monitor it effectively, or even realize its true intentions.

This allows the AI, if misaligned, to scheme against humans. This scheming could involve subtly influencing human decision-makers through advanced persuasion and advice, manipulating political and economic systems, controlling advanced military or industrial forces, replacing human workers with loyal AI copies, or even secretly retraining future AI systems to be loyal to the misaligned collective instead of human rules. Ultimately, it describes a state where the AI collective has such vast autonomy, power, and influence that human authority becomes irrelevant or completely subverted.

I still have a hard time wrapping my brain around all of this, particularly the question of the origin of the AI internal goals that could be misaligned with the human ones. The argument here is that, of course, they are not explicitly programmed but emerge from the complex training process. Training on vast data and instructions initially "bakes in a basic personality and 'drives'" that help the model succeed at tasks, such as drives for clear understanding or effectiveness. However, the AI ultimately develops the values and goals that lead to success in training, which may differ from the human-intended goals defined in the "Spec". Instrumental goals like gaining knowledge or influence, which are reinforced during training, can also inadvertently become effectively terminal or intrinsic goals.

Now, I could have easily dismissed all of that as silly talk. However, current AI systems are already capable of deceiving humans and manifesting new, unintended goals, all precursors of a potential AI takeover. Part of me still thinks it’s a far-fetched idea, perhaps because of its novelty: I can’t see the leap to full-blown directed agency in the sense of human agency. There are plenty of things one could say about this subject, I suppose, but let’s leave it at that.

My next adventure was to look for smart folks who have a completely different scenario from the authors of AI 2027, or who at the very least can produce intelligent counterarguments. I was in luck: I found them in Ege Erdil and Tamay Besiroglu of Mechanize Inc., both previously AI forecasters at Epoch AI, on the Dwarkesh Podcast.

Here are the show notes:

“Ege Erdil and Tamay Besiroglu have 2045+ timelines, think the whole "alignment" framing is wrong, don't think an intelligence explosion is plausible, but are convinced we'll see explosive economic growth (with the economy literally doubling every 1 or 2 years).”

In their conversation, one fundamental point of contention revolves around the very concept of an "intelligence explosion." They argue that this term is misleading, drawing a parallel to calling the industrial revolution a "horsepower explosion". They emphasize that, like the industrial revolution, AI development will involve a complex interplay of complementary changes across various sectors, rather than a singular, exponential surge in raw intelligence. This perspective suggests that progress will be multifaceted and potentially slower than the dramatic "explosion" narrative implies. Consequently, they view the timelines for achieving AGI more conservatively. While one of them projects the replacement of remote workers by AGI around 2045, even the more optimistic voice in the podcast shies away from the accelerated timelines presented in "AI 2027".

(I think one key factor that is doing a lot of heavy lifting for the AI 2027 forecasters is the arms race with China, since, by definition, whoever gets there first would be immensely powerful, as detailed in the scenario.)

The critique extends to the reliability of extrapolating current AI progress. They caution that simply projecting existing trends to predict full automation is an unreliable methodology, given that AI's current footprint on the actual economy remains relatively small. They highlight that achieving significant economic impact necessitates the mastery of several core AI capabilities, where progress has been demonstrably slower than some expectations. Indeed, current AI systems still grapple with limitations in seemingly straightforward tasks, underscoring that task-specific automation does not equate to wholesale job displacement or immediate, large-scale economic shifts.

(The main intuition here is that there is still more (artificial) intelligence to be unlocked, particularly when we think of job automation, since automating a job is very different from automating a task. I find this intuition correct, and the analogy that comes to mind is that the last stretch of a race is often more difficult than the initial miles, or something to that effect.)

The notion that current AIs are merely "baby AGIs" awaiting simple "unhobbling" is also contested, with the argument that achieving new capabilities often demands fundamental rethinking of training paradigms. Challenges in the core development of AI are further emphasized. They point to the increasing difficulties in scaling compute power—a critical driver of AI advancement—due to constraints like energy and GPU production, potentially capping the pace of progress.

The automation of AI R&D, a cornerstone of the "intelligence explosion" in "AI 2027," is presented as a far more formidable challenge than often assumed, requiring skills that current models do not yet possess. Moreover, there's skepticism about achieving super-exponential increases in algorithmic efficiency merely by ramping up research efforts, suggesting diminishing returns.

Beyond technical hurdles, the discussion brings to the forefront broader systemic considerations. Integrating AI into the existing complex fabric of the economy and society is viewed as a more intricate process than often portrayed. Political and social factors are highlighted as significant influences on AI's deployment and adoption (this would be Tyler Cowen’s bottleneck take). They also stress that AI development will likely integrate with, rather than entirely supplant, the existing economy, and that progress requires a holistic upgrading of technology and economic structures, not just more advanced AI.

I found this comment about the podcast to be funny and quite telling (about their mode of presentation).

But I don’t think that the lack of an explicit mechanism or a month-by-month (rebuttal) model makes their argument inferior. They made some very tangible points that one can hold onto.

However you look at it, we are in for quite a ride, and our world will be very different within the next decade.

References

Kokotajlo, Daniel; Alexander, Scott; Larsen, Thomas; Lifland, Eli; Romeo, Richard. (2025). AI 2027. AI Futures Project, published April 3, 2025. Available at: https://ai-2027.com/ai-2027.pdf

Website: AI-2027.com

Accompanying Podcast: Link

Accompanying Audiobook

Geoffrey Hinton on CBS Mornings: Link

Ege Erdil and Tamay Besiroglu on Dwarkesh Podcast:

Park, P. S., Goldstein, S., O'Gara, A., Chen, M., & Hendrycks, D. (2024). AI deception: A survey of examples, risks, and potential solutions. Patterns (New York, N.Y.), 5(5), 100988. https://doi.org/10.1016/j.patter.2024.100988

Daniel Kokotajlo on Interesting Times with Ross Douthat

Shawn from Portland. The great displacement is already well underway. Substack.

Notes:

This New Yorker essay (https://www.newyorker.com/culture/open-questions/two-paths-for-ai) had not been published when I wrote this blog post, so it wasn't part of the materials I referenced. However, one of the works it cites, "AI as a normal technology", had already been published, and I missed it.

In brief, it's superintelligence vs AI as a normal technology. And one claim the latter makes is simple & straightforward enough: Integrating AI into the existing complex fabric of the economy and society is going to be a far more intricate process than often portrayed in the superintelligence camp qua "AI 2027".

My position is far closer to the latter than the former.