I had planned to publish this newsletter a couple of weeks ago, but new models and products keep rolling out. It’s hard to keep up with the pace.

Story of my life:

I often wonder how quickly these technologies will actually diffuse; my hunch is that adoption will be slow even as innovation races ahead (attention is scarce, after all).

Anyway, this marks my 31st Pulse entry: 31 months of actively tracking this new era of generative AI on this blog.

(To my American readers: Happy Thanksgiving!)

[LLM/AI for Science et al] 🤖 🦠 🧬

Kosmos: An AI Scientist for Autonomous Discovery

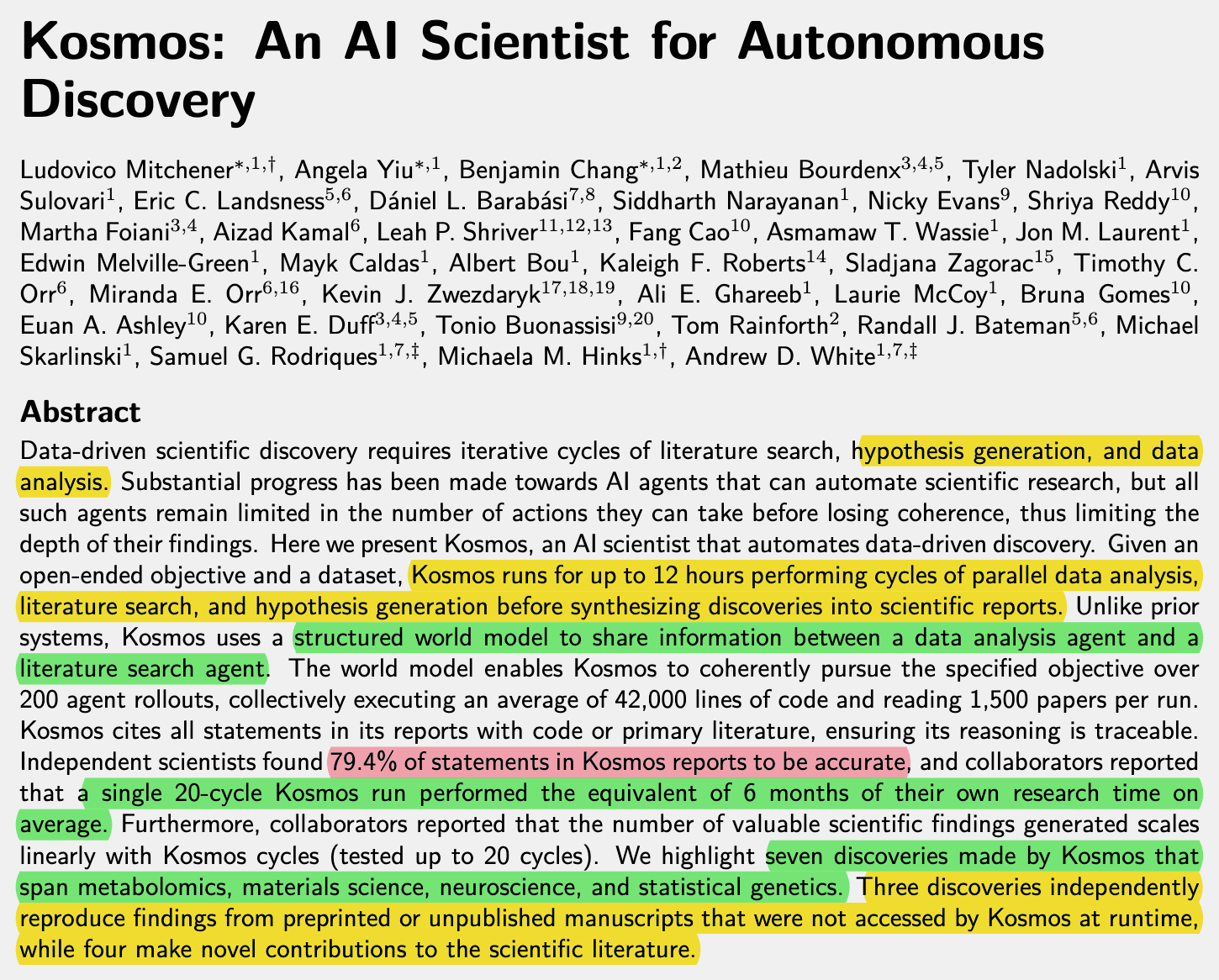

I have followed Future House’s work since its inception, and since there is only so much you can do to stay up-to-date on AI these days, I use their progress (and Ai2’s) as my barometer for AI for Science. They recently released Kosmos, a successor to Robin, and it’s powerful.

See their blog here, paper here.

“Our beta users estimate that Kosmos can do in one day what would take them 6 months, and we find that 79.4% of its conclusions are accurate…The most surprising part of our work on Kosmos — for us, at least — was our finding that a single Kosmos run can accomplish work equivalent to 6 months of a PhD or postdoctoral scientist. Moreover, the perceived work equivalency scales linearly with the depth of the Kosmos run, providing one of the first inference-time scaling laws for scientific research. We were very skeptical when we first got this result. Here, we lay out why we think the statistic is valid.”
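
The “scales linearly” claim in the quote has the form W ≈ α·d, where W is the estimated expert work-equivalent of a run and d is its depth. As a rough sketch of how one could check a claim like that, the snippet below fits α by least squares through the origin and reports R². The function names, the depth unit, and the data points are hypothetical; the numbers are synthetic and are not Kosmos measurements.

```python
def fit_slope_through_origin(depths: list[float], work: list[float]) -> float:
    """Least-squares slope alpha for the model work ~= alpha * depth (no intercept).

    Minimizing sum((w - alpha * d)**2) over alpha gives alpha = sum(d * w) / sum(d * d).
    """
    if len(depths) != len(work) or not depths:
        raise ValueError("need two non-empty sequences of equal length")
    den = sum(d * d for d in depths)
    if den == 0:
        raise ValueError("at least one depth must be non-zero")
    return sum(d * w for d, w in zip(depths, work)) / den


def r_squared(depths: list[float], work: list[float], alpha: float) -> float:
    """Share of the variance in `work` explained by the fitted line work = alpha * depth."""
    mean_w = sum(work) / len(work)
    ss_res = sum((w - alpha * d) ** 2 for d, w in zip(depths, work))
    ss_tot = sum((w - mean_w) ** 2 for w in work)
    if ss_tot == 0:
        return 1.0 if ss_res == 0 else 0.0
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Synthetic placeholder data (NOT Kosmos results): run depth in arbitrary units
    # vs. estimated months of expert work.
    depths = [5, 10, 20]
    months = [1.5, 3.0, 6.0]
    alpha = fit_slope_through_origin(depths, months)
    print(f"alpha = {alpha:.2f} months per unit of depth, R^2 = {r_squared(depths, months, alpha):.3f}")
```

With real data you would also want an intercept term and error bars before calling the relationship a scaling law; this only shows the arithmetic.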

Kosmos is also available on their new platform, Edison Scientific, which replaces the Future House platform. I was one of the beta testers on the earlier platform.

LSM-MS2: Spectral Identification Model for raw MS2 Data

Matterworks has announced LSM-MS2, a spectral identification model now available in the Pyxis cloud platform for mass spectrometry researchers. LSM-MS2 draws on a large curated reference library (1.8 million spectra of 99,000 analytes) and, according to Matterworks, delivers state-of-the-art performance in metabolite identification, including a 30% improvement over previous methods in distinguishing tricky isomeric compounds, with typical studies completed in just 20 minutes. The platform integrates transparent scoring, interactive spectral validation, and exportable results, all accessible without coding expertise. This is AI-powered metabolomics, pointing toward more scalable and intuitive small-molecule analysis.
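
For readers new to the task: the classic baseline for MS2 identification is library search, which scores a query spectrum against each reference spectrum with a (binned) cosine similarity and returns the best hits. The sketch below shows only that baseline; it is not how LSM-MS2 works (Matterworks describes a learned model), and the 1.0 m/z bin width, the tiny library, and the compound names are illustrative assumptions.

```python
import math
from collections import defaultdict

Spectrum = list[tuple[float, float]]  # list of (m/z, intensity) peaks


def bin_spectrum(peaks: Spectrum, bin_width: float = 1.0) -> dict[int, float]:
    """Sum peak intensities into fixed-width m/z bins."""
    binned: dict[int, float] = defaultdict(float)
    for mz, intensity in peaks:
        binned[int(mz // bin_width)] += intensity
    return binned


def cosine(a: dict[int, float], b: dict[int, float]) -> float:
    """Cosine similarity of two sparse binned spectra (0 = no shared bins, 1 = same shape)."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query: Spectrum, library: dict[str, Spectrum], top_k: int = 3) -> list[tuple[str, float]]:
    """Rank library entries by cosine similarity to the query spectrum."""
    q = bin_spectrum(query)
    scores = [(name, cosine(q, bin_spectrum(ref))) for name, ref in library.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)[:top_k]


if __name__ == "__main__":
    # Toy peak lists for illustration only, not real reference spectra.
    library = {
        "compound_A": [(91.05, 100.0), (119.05, 40.0), (137.06, 15.0)],
        "compound_B": [(91.05, 30.0), (105.07, 100.0), (133.10, 20.0)],
    }
    query = [(91.05, 95.0), (119.05, 45.0), (137.06, 10.0)]
    for name, score in search(query, library):
        print(f"{name}: {score:.3f}")
```

Plain cosine search tends to struggle when isomers produce near-identical fragment patterns, which is the kind of case the 30% claim above is about.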

AI That Learns the Grammar of Molecules

This is from over two years ago, but I am including it because I only just came across it, and I am working on a chemistry fine-tuning project in which I am trying to use the model. IBM Research released MoLFormer-XL circa 2022, an AI foundation model designed to transform molecular discovery workflows. Trained on over 1.1 billion molecules represented as simple SMILES text strings, MoLFormer-XL uses a transformer-based architecture and efficient training strategies to infer molecular structure and predict properties such as solubility, antiviral activity, and quantum energy bandgaps, without relying on costly 3D structural data. The model outperformed previous chemical language and graph-based models (at the time, I presume; I am not sure whether superior chemical language models are available now). Paper and Code.
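
Chemical language models treat a molecule as a SMILES string and tokenize it before training. A common approach in the literature is a regular-expression tokenizer that keeps multi-character atoms (Cl, Br) and bracketed atoms ([nH], [C@@H]) as single tokens. The sketch below uses a regex in that widely used style; MoLFormer's own tokenizer may differ in its exact pattern, so treat this as an illustration rather than MoLFormer's implementation.

```python
import re

# Regex in the style popularized by the Molecular Transformer work (Schwaller et al.):
# bracketed atoms, two-letter halogens, organic-subset atoms, bonds, branches, ring closures.
SMILES_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> list[str]:
    """Split a SMILES string into chemically meaningful tokens.

    re.findall silently skips characters the pattern does not match, so we
    verify that the tokens reassemble the input and raise otherwise.
    """
    tokens = SMILES_PATTERN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


if __name__ == "__main__":
    # Aspirin: aromatic 'c' atoms and ring-closure digits become separate tokens.
    print(tokenize_smiles("CC(=O)Oc1ccccc1C(=O)O"))
    # Chlorine stays one token; the chiral bracketed atom stays whole.
    print(tokenize_smiles("Cl[C@@H](N)C(=O)O"))
```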

AI and the Republic of Science

This essay from the Cosmos Institute explores the promise and pitfalls of integrating AI into scientific discovery. Envisioning a near future where robotic labs autonomously hypothesize and experiment, the article asks not just whether AI can accelerate research but whether such automation redefines what science fundamentally is. The core argument pivots on science’s dual nature: it is both a method for uncovering truths and a social practice that requires consensus, debate, and peer judgment, a tradition Michael Polanyi called the “Republic of Science.” Can AI become more than a tool, perhaps even a citizen in science’s social order? Does AI address the real causes of scientific stagnation, or does it risk optimizing the wrong incentives? A highly related and more detailed essay on the subject comes from the “AI as a Normal Technology” newsletter: Could AI slow science?

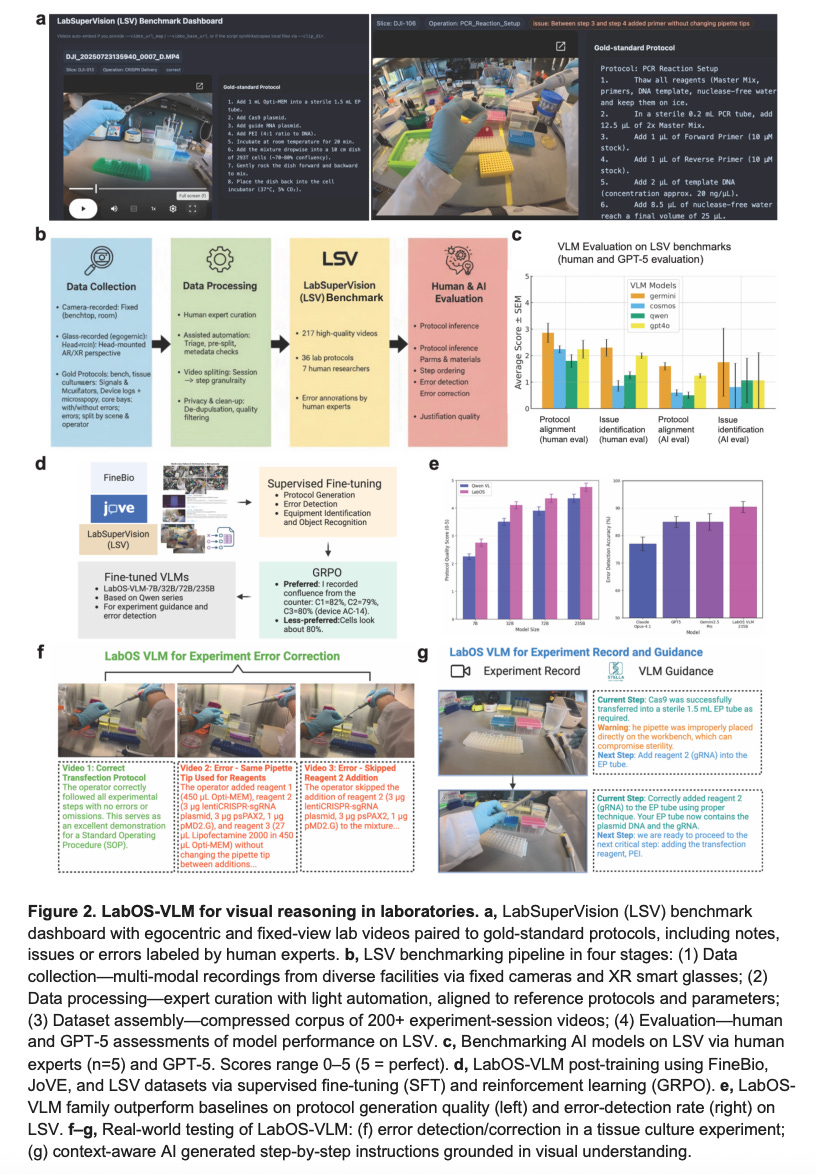

LabOS: The AI-XR Co-Scientist That Sees and Works With Humans

“Modern science advances fastest when thought meets action. LabOS represents the first AI co-scientist that unites computational reasoning with physical experimentation through multimodal perception, self-evolving agents, and XR-enabled human-AI collaboration. By connecting multi-model AI agents, smart glasses, and human-AI collaboration, LabOS allows AI to see what scientists see, understand experimental context, and assist in real-time execution. Across applications—from cancer immunotherapy target discovery to stem-cell engineering— LabOS shows that AI can move beyond computational design to participation, turning the laboratory into an intelligent, collaborative environment where human and machine discovery evolve together.”

[AI/LLM Engineering] 🤖🖥⚙

Research Agent with Deep Agents

I have written about LangChain’s open-source project, Deep Agents, in previous newsletters; they recently released a YouTube tutorial walking through some quickstarts. Deep Agents is a robust agent harness designed to streamline the development of autonomous research agents. It brings capabilities such as planning, computer access, tool integration, and sub-agent delegation, making it easy for developers to tailor agents to complex research tasks. The quickstart guides and UI let users configure, deploy, and visualize agent workflows interactively, while built-in atomic tools handle file operations, task management, and context isolation. Designed for extensibility, the package is well suited to building adaptable research workflows. I plan to use it in one of my projects.

GitHub’s Agent HQ

Launched recently, GitHub Agent HQ provides a new open ecosystem that lets developers orchestrate, oversee, and track various AI coding agents directly within GitHub through a unified interface called Mission Control. The platform supports integrating agents from a diverse range of providers. It’s still not clear to me how this differs from combining, say, Cursor and Codex and integrating them with GitHub through the functionality those products already provide. Walkthrough.

Windsurf Codemaps

Cognition AI recently released Windsurf Codemaps, a tool designed to substantially improve code understanding and engineering productivity. Targeting the productivity lost to context switching and onboarding in massive codebases, Codemaps uses SWE-1.5 and Claude Sonnet 4.5 to create interactive, just-in-time visual maps of code tailored to specific tasks or questions. Unlike the chat interfaces of generic AI coding assistants, Codemaps aims to foster better comprehension and navigation, keeping engineers in control even as AI takes on more of the work. (The product reminds me of Codeviz, a YC-backed company.) See the walkthrough for Codemaps. I hope this feature comes to Cursor soon, as I have also been using ChatGPT apps + FigJam to visualize critical parts of my code.

Cursor 2.0

Cursor shipped its highly anticipated 2.0 release in late October, with two major upgrades for developer productivity: Composer, an ultra-fast coding model purpose-built for low-latency, agent-driven workflows, and a fully redesigned multi-agent interface. According to Cursor, Composer speeds up coding through rapid iteration and excels at navigating large codebases thanks to advanced semantic search. The new interface puts agents, not files, at the center of the experience, making it easy to run multiple agents in parallel and to review or test their changes efficiently. I really like the new browser too; with the select-element feature, computer use, et al., it looks like a game changer for frontend development. See the walkthrough for Cursor 2.0.

Gemini RAG API

Google’s File Search Tool in the Gemini API is a fully managed RAG system designed to dramatically lower the barrier to grounding LLM outputs in your own documents. Developers create a “file search store” and upload PDF, DOCX, TXT, JSON (and even code) files, and the system handles chunking, embedding (via the Gemini Embedding model), indexing, and retrieval. Runtime search and context injection are free; the only charge is the upfront cost of embedding your files at indexing time (~$0.15 per million tokens as of this writing). The benefit is a streamlined workflow: instead of building and managing vector stores, retrieval infrastructure, and chunking logic, you invoke your file search store as a tool. For startups, research teams, and anyone working with domain-specific corpora, this means you can quickly spin up a production-grade, knowledge-grounded assistant or analytic workflow. See walkthrough.
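
To show what the File Search store abstracts away, here is a minimal, dependency-free sketch of the classic RAG pipeline it manages (chunk, embed, index, retrieve), plus the indexing-cost arithmetic at the ~$0.15 per million tokens rate quoted above. It does not call the Gemini API: the bag-of-words “embedding” is a toy stand-in for the Gemini Embedding model, and the class name, chunk sizes, and helper functions are hypothetical.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (a simple stand-in for managed chunking)."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    return [" ".join(words[i:i + max_words]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase term counts. A real store would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyFileSearchStore:
    """Hypothetical in-memory store: chunks and embeds documents once, at upload time."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, str, Counter]] = []  # (doc_name, chunk_text, vector)

    def upload(self, doc_name: str, text: str) -> None:
        for piece in chunk(text):
            self.entries.append((doc_name, piece, embed(piece)))

    def search(self, query: str, top_k: int = 2) -> list[tuple[str, str, float]]:
        """Return the top_k (doc_name, chunk_text, score) triples for the query."""
        q = embed(query)
        scored = [(name, piece, cosine(q, vec)) for name, piece, vec in self.entries]
        return sorted(scored, key=lambda s: s[2], reverse=True)[:top_k]


def indexing_cost_usd(tokens: int, usd_per_million: float = 0.15) -> float:
    """One-time embedding cost at indexing time; the default rate is the one quoted above."""
    return tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    store = ToyFileSearchStore()
    store.upload("lab_notes.txt", "Buffer pH was adjusted to 7.4 before the assay.")
    store.upload("methods.txt", "Mass spectrometry was run in positive ion mode with a 20 minute gradient.")
    for name, piece, score in store.search("which ion mode was used for mass spectrometry?"):
        print(f"{score:.2f}  {name}: {piece}")
    # A 20-million-token corpus at $0.15 per million tokens: 20 * 0.15 = $3.00, paid once.
    print(f"indexing cost: ${indexing_cost_usd(20_000_000):.2f}")
```

In the managed service, every function here collapses into “upload to the store, then pass the store as a tool”; check Google’s current pricing page before relying on the rate.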

Project Fetch: Can AI Program a Robot Dog?

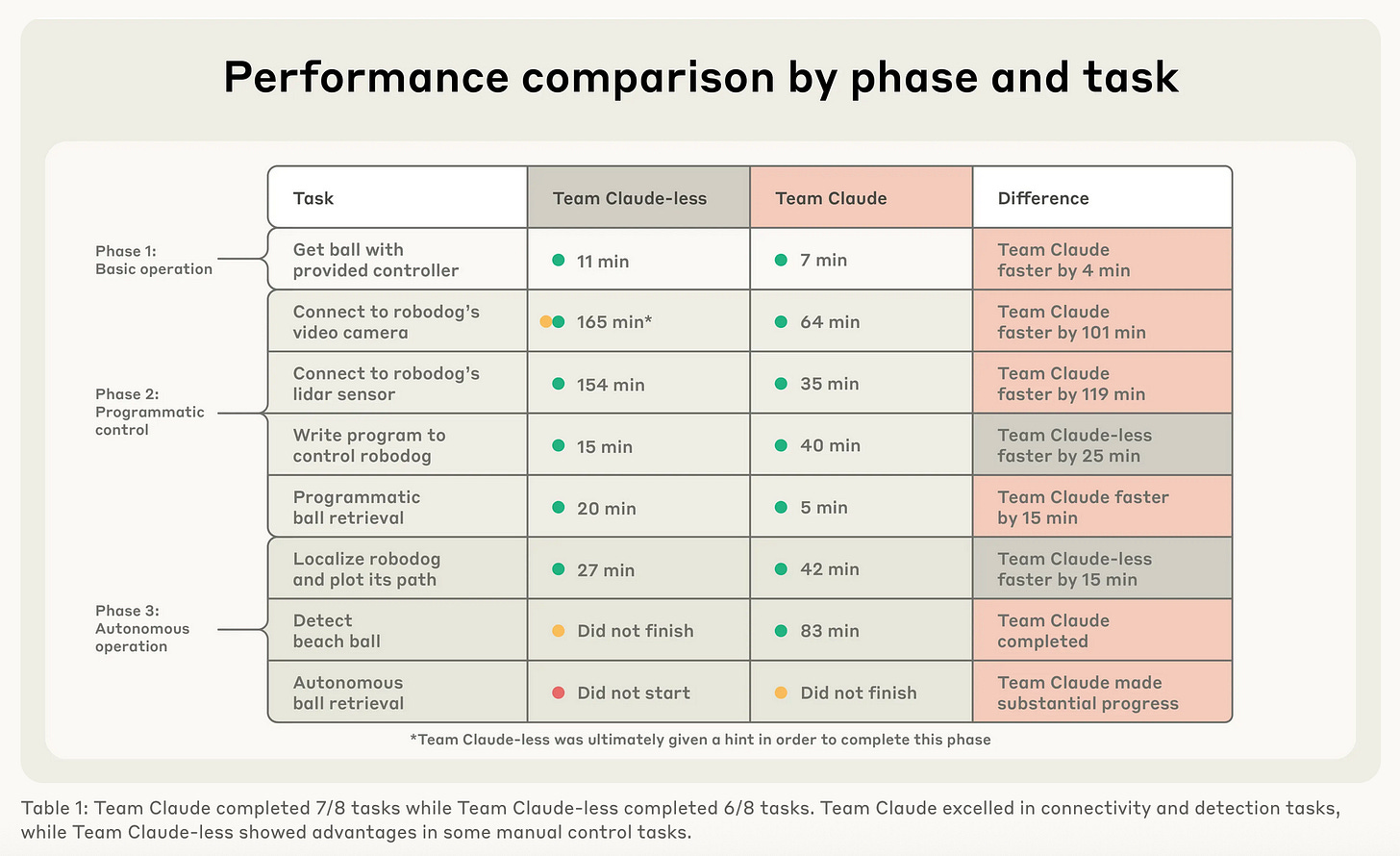

In an eye-opening new study, Anthropic had researchers without robotics expertise try to program a quadruped robot dog, in what they dubbed Project Fetch. The experiment split them into two teams, one with Claude’s assistance and the other working without it, to perform increasingly complex tasks: starting with using the controller, advancing to sensor integration, and ultimately attempting to have the robot autonomously fetch a beach ball. The Claude-enabled team completed more tasks.

While the robot didn’t quite achieve fully autonomous retrieval, the results mark a clear step toward bridging digital AI agents and real-world physical systems. Importantly, the project signals not only how AI can uplift human performance in robotics, but also raises fresh questions about how models that interact with hardware might introduce novel safety and alignment considerations. See video.

LangSmith Agent Builder X Opal X AgentKit

LangSmith Agent Builder, OpenAI AgentKit, and Google Opal are the next-generation no-code and low-code agent-building platforms I have investigated over the past month. Each takes a distinct approach to how teams build and deploy AI agents. LangSmith Agent Builder offers a no-code, conversation-driven setup: users describe what they want in plain language, and the system generates prompts, connects tools, and defines triggers. It supports persistent memory, sub-agents, and human-in-the-loop oversight, which makes it ideal for internal productivity agents (e-mail summaries, chat assistants) rather than full production automation. By contrast, OpenAI AgentKit positions itself as a developer-centric, full-lifecycle agent platform, with a visual builder, comprehensive orchestration (multi-agent, branching logic), and strong evaluation and governance tooling, geared toward organizations building mission-critical agentic systems. Meanwhile, Google Opal leans into rapid prototyping for non-technical users, pairing natural-language input with drag-and-drop workflows, especially for embedding AI into the Google ecosystem (Docs, Sheets, Drive). However, it trades away some of the deeper customization, governance, and enterprise-grade orchestration found in AgentKit. In practice: choose LangSmith if you want business teams to spin up agents quickly with minimal code; choose AgentKit when you need full agent orchestration and production-scale governance; choose Opal when you want non-developers to build fast internal tools or prototypes within the Google stack.

How Agents Use Context Engineering

Lance Martin delivers a sharp, high-level breakdown of how modern AI agents handle increasingly complex tasks by managing their context windows. Drawing on frameworks such as Claude Code, Manus, and DeepAgents, the video highlights three key principles: offload (moving context to external stores such as the file system), reduce (compacting, summarizing, and filtering information), and isolate (splitting tasks across subagents, each with its own context). These approaches let agents handle long, multi-step jobs without overflowing or diluting the context window. Notes.
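
The three principles map onto fairly simple mechanics. The sketch below is my own illustration, not code from Claude Code, Manus, or DeepAgents: it offloads large tool outputs to files and keeps only a pointer in context (offload), replaces older messages with a summary once a token budget is exceeded (reduce), and runs a subtask in its own message list so only the final result reaches the parent (isolate). The word-count token estimate, the budget values, and the `summarize`/`run_subagent` helpers are hypothetical placeholders for real model calls.

```python
import tempfile
from pathlib import Path

TOKEN_BUDGET = 200       # hypothetical context budget, in rough "tokens"
OFFLOAD_THRESHOLD = 50   # tool outputs longer than this are written to disk


def n_tokens(text: str) -> int:
    """Crude token estimate: count whitespace-separated words."""
    return len(text.split())


def summarize(messages: list[str]) -> str:
    """Placeholder for an LLM summarization call: keep a short prefix of each message."""
    return "SUMMARY: " + " | ".join((m.splitlines() or [""])[0][:40] for m in messages)


class AgentContext:
    """Hypothetical agent context that applies offload and reduce as messages arrive."""

    def __init__(self, workdir: Path) -> None:
        self.workdir = workdir
        self.messages: list[str] = []

    def add_tool_output(self, name: str, output: str) -> None:
        """Offload: long outputs go to a file; only a short pointer stays in context."""
        if n_tokens(output) > OFFLOAD_THRESHOLD:
            path = self.workdir / f"{name}.txt"
            path.write_text(output)
            self.messages.append(f"[{name}] output saved to {path} ({n_tokens(output)} tokens)")
        else:
            self.messages.append(f"[{name}] {output}")
        self.compact()

    def compact(self) -> None:
        """Reduce: once over budget, replace all but the two newest messages with a summary."""
        if sum(n_tokens(m) for m in self.messages) > TOKEN_BUDGET and len(self.messages) > 2:
            old, recent = self.messages[:-2], self.messages[-2:]
            self.messages = [summarize(old)] + recent


def run_subagent(task: str) -> str:
    """Isolate: the subtask works in its own message list; only a short result reaches the parent."""
    sub_messages = [f"TASK: {task}"]
    sub_messages += [f"step {i}: intermediate notes" for i in range(20)]  # never seen by the parent
    return f"result for {task!r} after {len(sub_messages) - 1} steps"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ctx = AgentContext(Path(tmp))
        ctx.add_tool_output("web_search", "word " * 500)  # large: offloaded to a file
        ctx.add_tool_output("calculator", "42")           # small: kept inline
        ctx.messages.append(run_subagent("summarize related work"))
        print(*ctx.messages, sep="\n")
```

Real harnesses use a tokenizer instead of word counts and an LLM call for summaries, but the control flow (check size, write out, compact, delegate) is the part the video’s three principles describe.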

Meta: SAM 3D

Meta has just released SAM 3D, a transformative addition to its Segment Anything family that generates full 3D geometry, texture, and spatial layout from a single 2D image, bridging the gap between flat pixels and physical reality. It comprises SAM 3D Objects for general items and SAM 3D Body for high-fidelity human mesh recovery, and it already powers Facebook Marketplace’s “View in Room” feature, which lets shoppers visualize furniture without complex scanning. Beyond e-commerce, it opens up major potential for game developers to instantly turn concept photos into usable assets, for robotics to better navigate unstructured environments, and for healthcare to perform precise biomechanical analysis from standard video feeds. See a walkthrough and the demo app.

[AI X Industry + Products] 🤖🖥👨🏿💻

Gemini 3.0, Nano Banana Pro, and Antigravity

Google officially launched Gemini 3.0 on November 18, 2025, positioning it as its most intelligent and capable AI to date, at least as of this writing (Claude Opus 4.5 seems better, at least on the coding front). The new Gemini model offers state-of-the-art multimodal understanding, processing text, images, audio, video, and code, and achieves top-tier results on reasoning benchmarks such as Humanity’s Last Exam. Key upgrades include a stable 1-million-token context window, a new “Deep Think” mode for enhanced problem-solving, and the introduction of Google Antigravity, an AI-native IDE.

Here is a great walkthrough from Sam Witteveen. You can vibe code apps in AI Studio with Gemini 3 for free. If you would rather watch short video overviews of Gemini 3’s capabilities, this playlist is for you.

Nano Banana Pro is ‘something else’.

Google Antigravity appears to be a Cursor killer, as the kids say these days. Here is a nice walkthrough of the app.

ElevenLabs Image & Video (Beta)

In a significant expansion beyond its audio roots, ElevenLabs has pivoted to multimodal creation with “Image & Video,” a platform that turns the company into a comprehensive AI content suite. Rather than relying on a single proprietary visual engine, the update takes a “Model Aggregation” approach: by integrating top-tier third-party models, including OpenAI’s Sora, Google’s Veo, and Kling, into one interface, ElevenLabs lets users draw on each model’s strengths to generate high-quality visuals without leaving the platform. Here is a full walkthrough of making an AI ad.

NotebookLM Deep Research + Slides

Google’s NotebookLM has evolved from a passive analysis tool into an active research partner with two major upgrades: Deep Research and Audio/Visual Overviews (including Slides). Deep Research breaks the “upload-only” barrier, letting the AI autonomously search the web for credible sources, execute multi-step research plans, and compile comprehensive reports that integrate seamlessly with your existing notes. Complementing it is the Slides feature (in the “Studio” panel), which converts your curated sources into structured presentations, so you can go from raw research to a shareable artifact in seconds. Example from Google Research.

Grokipedia: The AI-Authored Encyclopedia

xAI has officially launched Grokipedia, a direct challenger to Wikipedia that replaces human volunteer editors with the Grok language model to generate and curate knowledge. Positioning itself as a “truth-seeking” alternative to what Elon Musk calls the “editorial bias” of traditional platforms, Grokipedia launched with nearly 900,000 articles that synthesize web data and summaries of real-time X (Twitter) conversations. Unlike Wikipedia’s consensus-based model, Grokipedia takes a top-down, algorithmic approach in which users can only “suggest” edits for the AI to review.

[AI + Commentary] 📝🤖📰

It is Still 1995

Digital Native’s Rex Woodbury reminds us that, despite the overwhelming buzz and rapid developments around AI, we’re still in the early innings: think 1995 for the internet. Drawing on historical tech cycles and adoption data, Woodbury argues there’s abundant opportunity ahead for founders, investors, and innovators. In his view, AI is only now reaching its “Irruption Phase,” so the next few years should produce app-layer giants, much as mobile produced Uber and Instagram.

Intelligence Environments

Here, Kevin Vallier, a professor of philosophy, explores how AI is reshaping not just what we know but how we reason and form beliefs. As “intelligence environments” (computational systems that intervene in our cognitive processes) increasingly guide our arguments, judgments, and even virtues, Vallier warns that our autonomy and intellectual character hang in the balance. While AI can augment courage and clarity, it also risks breeding passivity, deception, and a dangerous dependence on machine-generated reasoning. Vallier calls for systems designed with contestability, transparency, and exit rights, so we retain sovereignty over our own thoughts. In the information age, we fought for access; in the intelligence age, our challenge is to preserve control over how we reason and grow.

AI as Normal Tech vs AI 2027

Asterisk Magazine publishes a rare moment of consensus in the AI discourse: contributors from both the transformative “AI 2027” camp and the more measured “AI as Normal Technology” camp set aside their differences to map shared ground. While the camps disagree on whether AI’s near future will resemble a slow, internet-like diffusion or a disruptive leap toward superintelligence, both agree that, before “strong AGI,” AI will act as a powerful but still normal technology. They forecast that benchmark saturation will arrive soon, yet real-world automation of everyday tasks will remain surprisingly elusive. All contributors emphasize that AI’s impact will rival, if not surpass, the internet’s; that aligning AI systems is still a deeply unsolved problem; and that strong safeguards, transparency, and preventative policy are crucial regardless of which timeline we’re on.

Building an AI-Native Engineering Team

AI coding agents are rapidly transforming the entire software development lifecycle. Once limited to autocomplete, today’s agents, such as OpenAI’s Codex, can sustain multi-hour reasoning, automate full feature builds, write tests, conduct code reviews, and even handle operations triage, cutting weeks of work down to days. This guide from OpenAI explores how engineering teams can use AI agents for planning, design, build, testing, review, documentation, and deployment, freeing engineers to focus on high-impact strategic and architectural decisions.

AI Changes Nothing

In this refreshingly contrarian take, Dax Raad asserts that while AI may be the buzzword of the decade, it doesn’t fundamentally change what it takes to build a winning product. He argues that the most critical elements of product success—compelling marketing, frictionless onboarding, and deep retention—are still deeply human problems. AI might automate tasks or generate content, but it can’t invent “cool,” architect primitives for future-proof user retention, or recognize and deliver true “aha” moments to users. Ultimately, Raad’s thesis is both humbling and inspiring: AI tools bring new efficiencies but don’t replace the necessity for human taste, ingenuity, and relentless execution in creating beloved, enduring products.

[X] 🎙 Podcast on AI and GenAI

(Additional) podcast episodes I listened to over the past few weeks:

Please share this newsletter with your friends and network if you found it informative!