My Other Publications:

Video Essays: The Story in Your Head, Pillars of Modern Civilization.

In this newsletter:

[LLM/AI for Science et al] 🤖 🦠 🧬

[I]: 🥼 Lila: AI Scientific Superintelligence

In his popular essay Machines of Loving Grace, Dario Amodei (the CEO of Anthropic) made a very interesting prediction about AI:

“My basic prediction is that AI-enabled biology and medicine will allow us to compress the progress that human biologists would have achieved over the next 50-100 years into 5-10 years. I’ll refer to this as the “compressed 21st century”: the idea that after powerful AI is developed, we will in a few years make all the progress in biology and medicine that we would have made in the whole 21st century.”

I was skeptical when I first read this prediction, and I remain so, although less so than before. It’s clear that there is a lot of movement in the AI-biology space. I’ve written extensively on this blog about FutureHouse, a nonprofit that aims to automate scientific discovery (read more about them in the commentary section of this newsletter). Another company I’ve learned about recently is Lila Sciences, which aims to pioneer scientific superintelligence:

“Lila is devising a new system where AI models, working in concert with human scientific ingenuity, are integrated with an autonomous platform that we call an AI Science Factory (AISF™). AISFs put experiment design, conduction, observation, and re-design in the hands of AI, removing barriers between what have traditionally been disparate areas of science. The goal: To produce new scientific knowledge to address humankind’s greatest challenges at a scale, speed, and accuracy far beyond human capacity alone.”

The New York Times recently wrote a profile of the company.

Here are some excerpts that make Dario’s ‘basic’ prediction increasingly believable:

“Already, in projects demonstrating the technology, Lila’s A.I. has generated novel antibodies to fight disease and developed new materials for capturing carbon from the atmosphere. Lila turned those experiments into physical results in its lab within months, a process that most likely would take years with conventional research.”

“One of those projects found a new catalyst for green hydrogen production, which involves using electricity to split water into hydrogen and oxygen. The A.I. was instructed that the catalyst had to be abundant or easy to produce, unlike iridium, the current commercial standard. With A.I.’s help, the two scientists found a novel catalyst in four months — a process that more typically might take years. That success helped persuade John Gregoire, a prominent researcher in new materials for clean energy, to leave the California Institute of Technology last year to join Lila as head of physical sciences research.”

[II]: 📄 Ai2 Paper Finder

Researchers often struggle with traditional literature search tools, which can make it difficult to find relevant papers beyond the most popular or highly cited works. This is particularly true for complex queries or when seeking niche findings or papers. Existing tools may not effectively break down intricate research questions or follow the winding path of citations that often leads to crucial, yet less prominent, discoveries, leaving researchers spending excessive time searching or potentially missing vital papers.

To address this pain point, the Allen Institute for AI (Ai2) developed Paper Finder, an LLM-powered literature search system. As detailed in their blog post, Paper Finder mimics a human researcher's deeper discovery process: it breaks down complex queries, searches across diverse sources, follows citation links, and evaluates papers for relevance, specifically aiming to uncover hard-to-find studies. By providing summaries that explain why a paper is relevant to the specific query, it helps surface niche but important literature often missed by conventional search methods. You can try the app here.

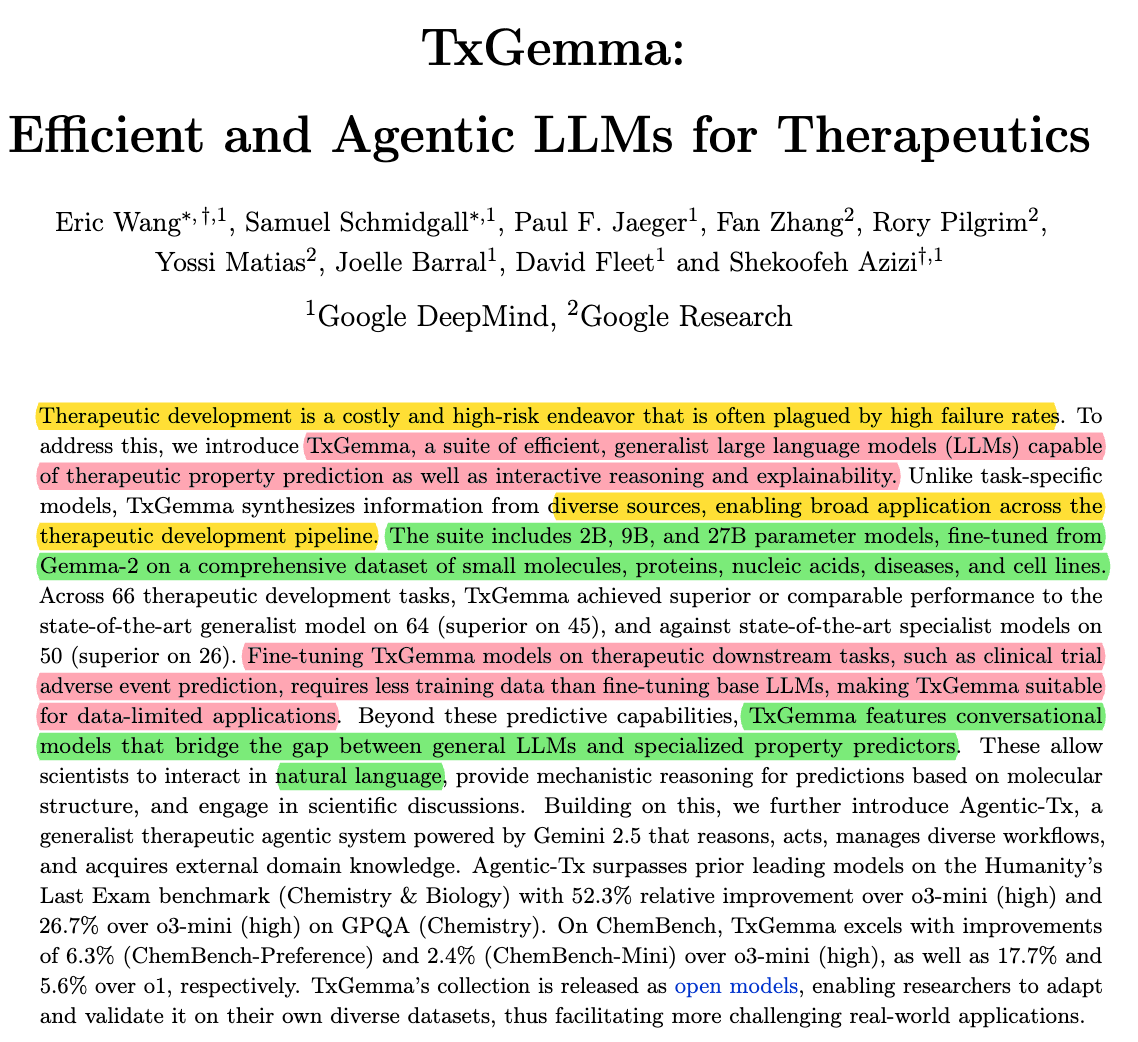

[III]: 💊 TxGemma: Efficient and Agentic LLMs for Therapeutics

Google has introduced TxGemma, a new family of open models designed to accelerate the often slow and complex process of therapeutic development. Building on Google DeepMind's Gemma models, TxGemma is specifically trained to understand and predict the properties of potential therapeutic treatments throughout the drug discovery pipeline, aiming to bring new medicines to patients faster.

TxGemma is released in three sizes (2B, 9B, and 27B parameters) to suit different computational needs, with specialized 'predict' versions for specific tasks and 'chat' versions optimized for conversational data analysis. Notably, these models can be integrated into larger agentic systems to tackle complex research challenges. To foster innovation and adoption within the research community, Google has made TxGemma openly available via platforms like Vertex AI Model Garden and Hugging Face, encouraging developers to fine-tune and adapt the models for their unique therapeutic data and discovery workflows.

You can read the paper here, and watch the announcement here.

[AI/LLM Engineering] 🤖🖥⚙

[I]: ⚙Amazon’s Nova Act

Amazon recently introduced Nova Act, a new AI model designed to enable developers to build agents capable of performing actions within web browsers. This research preview, accessible through the Amazon Nova Act SDK, allows for the creation of AI agents that can automate tasks like filling out forms, making online purchases, and scheduling appointments.

The Nova Act SDK allows developers to break down intricate workflows into smaller, more manageable commands and integrate Python code for added customization, including testing and parallelization. The model has shown strong performance in internal evaluations against comparable models. See X Thread & Code base.

[II]: 💻LangGraph CUA

This tutorial introduces LangGraph Computer Use Agents, a library built on top of LangGraph that simplifies the creation of computer use agents (CUAs). The demonstration highlights its ability to interact with websites lacking direct APIs. The library requires an OpenAI API key and a Scrapybara API key, the latter providing virtual desktops for the AI agents.

The tutorial walks viewers through the installation process and demonstrates how to create and visualize a LangGraph agent using a Jupyter Notebook. It showcases the agent's ability to navigate websites within a virtual machine, responding to user input. LangSmith is used for observability. Also see the code base.

[III]: 📂Understanding MCP from Scratch

Here is one of the clearer video tutorials I have watched lately on the Model Context Protocol (MCP), a system designed to enhance LLMs by connecting them with external tools and context across various AI applications. MCP functions as a client-server protocol where AI applications (clients) can discover and utilize tools, resources (like documents), and prompts made available by an MCP server. This standardized approach aims to simplify the integration process, allowing different AI platforms to seamlessly access shared capabilities.

The tutorial demonstrates MCP's utility with a practical example: building a "LangGraph query tool". It guides viewers through creating an MCP server using a Python SDK, defining the necessary tools and resources, and then shows how to connect this server to applications such as Cursor, Windsurf, and Claude Desktop by adjusting their configurations. Ultimately, MCP offers a unified interface for augmenting AI applications, making it easier to leverage external functionalities and information consistently across different platforms. (You could also say MCP is like a magic wand for LLMs). See the tutorial notes.

For example, see ElevenLabs MCP in action.
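To make the discover-then-call pattern described above more concrete, here is a minimal sketch in plain Python. It is not the real MCP SDK and not the code from the tutorial: `ToyToolServer`, `langgraph_query`, and the `NOTES` data are hypothetical names invented for illustration, and the server runs in-process rather than over a transport. It only borrows the JSON-RPC-style `tools/list` and `tools/call` method names to show how a client can first discover tools and then invoke one by name.

```python
from typing import Any, Callable


class ToyToolServer:
    """In-process stand-in for an MCP-style server. Hypothetical; not the real SDK."""

    def __init__(self) -> None:
        # Maps tool name -> (description, callable).
        self._tools: dict[str, tuple[str, Callable[..., str]]] = {}

    def tool(self, description: str) -> Callable:
        """Decorator that registers a function as a discoverable tool."""
        def register(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[fn.__name__] = (description, fn)
            return fn
        return register

    def handle(self, request: dict[str, Any]) -> dict[str, Any]:
        """Answer one JSON-RPC-shaped request dict with a response dict."""
        reply: dict[str, Any] = {"jsonrpc": "2.0", "id": request.get("id")}
        method = request.get("method")
        if method == "tools/list":
            # Discovery: report every registered tool and its description.
            reply["result"] = {
                "tools": [
                    {"name": name, "description": desc}
                    for name, (desc, _) in self._tools.items()
                ]
            }
        elif method == "tools/call":
            # Invocation: look the tool up by name and pass the arguments through.
            params = request.get("params", {})
            entry = self._tools.get(params.get("name"))
            if entry is None:
                reply["error"] = {"code": -32602, "message": "Unknown tool"}
            else:
                text = entry[1](**params.get("arguments", {}))
                reply["result"] = {"content": [{"type": "text", "text": text}]}
        else:
            reply["error"] = {"code": -32601, "message": "Method not found"}
        return reply


server = ToyToolServer()

# Hypothetical notes standing in for real documentation.
NOTES = {
    "checkpointing": "Checkpointers persist graph state between runs.",
    "streaming": "Graphs can stream intermediate updates to the caller.",
}


@server.tool("Look up a short note about a LangGraph topic")
def langgraph_query(topic: str) -> str:
    return NOTES.get(topic.lower(), "No note found for that topic.")


if __name__ == "__main__":
    # A client first discovers the available tools, then calls one by name.
    listing = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    print([t["name"] for t in listing["result"]["tools"]])
    call = server.handle({
        "jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "langgraph_query", "arguments": {"topic": "streaming"}},
    })
    print(call["result"]["content"][0]["text"])
```

The point of the sketch is the shape of the exchange: the application never hard-codes the tool, it asks the server what exists and then calls it through the same uniform interface. A real MCP server adds transports, schemas, resources, and prompts on top of this idea.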

[AI X Industry + Products] 🤖🖥👨🏿💻

[I]: 🍎Apple’s AI Doctor

With news circulating about Apple building an “AI Doctor”/health coach, I believe Apple is poised to become a leader in the AI-enabled health market in the coming years. Given their significant penetration in the wearable market with the Apple Watch and their established platform and network, they are well-positioned to deliver AI-driven health advancements that will benefit a wide range of users.

In short, the market penetration is huge: >30 million Apple smartwatch users in the US.

So are their capabilities: the Stanford Apple Heart Study, Apple Watch accuracy for cardiovascular measurements, the GAPcare II Study, and accuracy in AFib detection.

Apple will push further into healthcare with an AI-driven doctor -- a kind of health coach that would be integrated with the company's mobile devices.

As reported by Mark Gurman at Bloomberg, the initiative is called Project Mulberry and could yield a version for consumers as early as next year and launch with a future version of iOS. It would reportedly offer advice on food habits and exercise techniques, and use data that Apple already collects from Apple's Health app and hardware including the Apple Watch.

It's no secret that Apple's CEO Tim Cook has been promising that the company's long-term plans include a big push into more health-related technologies.

More.

[II]: 🧑💻️Gemini 2.5: Our most intelligent AI model

Google has unveiled its most intelligent AI model to date: Gemini 2.5. The initial release, an experimental version of 2.5 Pro, has already achieved state-of-the-art results across numerous benchmarks. These Gemini 2.5 models are designed as "thinking models," meaning they are capable of reasoning through their thoughts before providing a response, leading to greater accuracy and performance.

Gemini 2.5 Pro is currently accessible through Google AI Studio and, for Gemini Advanced users, the Gemini app, with integration into Vertex AI planned for the near future. This model excels in complex tasks, holds the top position on the LMArena leaderboard at the time of writing, and showcases robust reasoning and coding abilities. Building upon the strengths of its predecessors, Gemini 2.5 offers native multimodality and a large context window, currently 1 million tokens with plans to expand to 2 million.

See the blog and Sundar’s tweet. Also see the demos, from a JavaScript animation of complex flocking behavior to a visualization of a Mandelbrot set.

[III]: 🖼4o Image Generation & Midjourney v7

OpenAI has integrated its most advanced image generator into GPT-4o, aiming to make image generation a core capability of their language models. This new feature, as OpenAI highlights, focuses on creating not just aesthetically pleasing images but also practical and useful visuals. GPT-4o excels at rendering text accurately within images, precisely following user prompts, and leveraging its knowledge base and chat context to generate relevant and coherent visuals, even transforming uploaded images or using them as inspiration.

The improved capabilities stem from training the models on a vast dataset of online images and text, allowing them to understand the relationship between the two and between images themselves. This results in a model with enhanced visual fluency, capable of generating consistent and context-aware images. OpenAI emphasizes the utility of this technology for visual communication, enabling the creation of logos, diagrams, and other informational graphics with precise meaning. That said, it was the Studio Ghibli-style memes that stole the show on launch day.

Read the full blog here. Perhaps spurred by the reception of 4o image generation, Midjourney also released a new image model, V7, its first in a year.

4o prompt: Give me an image that illustrates photosynthesis with detailed annotations. (On-demand educational illustration is (almost) here!)

Midjourney V7 prompt: Painting in the style of Frans Hals, a happy, beautiful French maid woman holding and drinking a glass of white wine.

[AI + Commentary] 📝🤖📰

[I]: 🎻On LLM Fine Tuning

Andrew Ng shares insights on fine-tuning language models, discussing the balance between its utility and the effectiveness of simpler prompting techniques. He suggests that while fine-tuning is valuable, many teams might achieve comparable results through methods like mega prompts, few-shot prompting, or agentic workflows. Because implementing fine-tuning is complex (it involves data collection, provider selection, and deployment), he recommends reserving it for cases where prompting falls short.

However, Ng also outlines specific scenarios where fine-tuning proves beneficial. He highlights its ability to improve accuracy in critical applications, particularly when rule-based prompting struggles to achieve the required reliability, such as in complex decision-making. Furthermore, fine-tuning excels at enabling models to learn and replicate a specific communication style, capturing nuanced language patterns that are difficult to define through prompting alone.

Finally, Ng notes that fine-tuning can be a strategic move for reducing latency or cost when scaling applications that initially relied on large, slower, or more expensive models. By fine-tuning smaller models on data generated by larger ones (model distillation), teams can achieve similar performance with greater efficiency.
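As a rough illustration of the distillation idea mentioned above, the sketch below builds a small training set by asking a "teacher" to label prompts and writing the pairs out as JSON Lines. Everything here is an assumption for illustration and not from Ng's post: `teacher_label` is a keyword-based stand-in for a call to a large model, the ticket-classification task is made up, and the chat-style `messages` record is just one common layout for fine-tuning data, so check your provider's expected format.

```python
import json


def teacher_label(ticket: str) -> str:
    """Stand-in for a call to a large, expensive model (hypothetical)."""
    text = ticket.lower()
    if "refund" in text or "charge" in text:
        return "billing"
    if "crash" in text or "error" in text:
        return "bug"
    return "other"


def build_distillation_set(prompts: list[str]) -> list[dict]:
    """Pair each prompt with the teacher's answer in a chat-style record."""
    records = []
    for prompt in prompts:
        records.append({
            "messages": [
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": teacher_label(prompt)},
            ]
        })
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(record) for record in records)


if __name__ == "__main__":
    prompts = [
        "I was charged twice, please refund me.",
        "The app shows an error when I log in.",
        "Do you have a dark mode?",
    ]
    # The resulting text could be saved to a file and used to fine-tune a smaller model.
    print(to_jsonl(build_distillation_set(prompts)))
```

In practice the teacher would be the large model already serving the application, and the smaller student model would be fine-tuned on these pairs and then evaluated against the teacher before replacing it.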

[II]: ♟️Strategy Value of Hype

Sangeet Paul Choudary argues in this insightful piece that in today's rapidly evolving landscape, particularly with emerging technologies like AI, traditional "moats" of competitive advantage are becoming less effective. Drawing a fascinating parallel with Renaissance-era Florence, Choudary highlights how the city, lacking military might, strategically invested in art, philosophy, and diplomacy to cultivate a reputation as a vital cultural center, making it too valuable to attack. This "strategic narrative construction," he contends, is akin to modern "hype."

Choudary posits that hype, much like Florence's carefully crafted image, serves as a powerful coordination mechanism, especially in fragmented and uncertain environments. It can create a focal point, instill urgency, offer a moral reframing, attract an ecosystem of collaborators, reshape the perceived risks and rewards, and even establish new standards. He illustrates this with Tesla's success in the electric vehicle market, where a compelling narrative and infrastructure outpaced traditional institutional coordination efforts.

However, Choudary cautions that not all hype is created equal. While "strategic coordination hype" can drive long-term value creation by aligning actors towards a common goal, "temporal arbitrage hype" focuses on short-term gains and can damage trust. Ultimately, the author suggests that in an era where progress relies on the alignment of diverse actors, understanding and strategically leveraging narrative hype is increasingly crucial, even if it comes with inherent risks and the potential for imbalance.

[III]: 💡Future House: Meet the Humans Building AI Scientists

In this comprehensive piece, Asimov Press interviewed the cofounders of FutureHouse, a San Francisco-based nonprofit dedicated to building semi-autonomous AIs to accelerate scientific discovery. I have written about their work extensively on my blog. They have created a suite of "crow"-themed tools like ChemCrow and WikiCrow designed to automate research tasks, from designing chemical reactions to summarizing complex scientific information. The ultimate goal is to develop AI agents capable of independent scientific breakthroughs by streamlining access to and analysis of scientific literature.

According to the article, FutureHouse co-founders Sam Rodriques and Andrew White are tackling the complex challenge of imbuing AI with the cognitive abilities necessary for scientific reasoning. Their work highlights the critical need for more data on how humans conduct scientific research, paving the way for AI to assist and eventually lead in scientific exploration.

[IV]: 📱Amasad on the Future of SaaS

Amjad Masad (CEO of Replit) suggests that SaaS founders go against Silicon Valley's dogma of "focus" and build Chinese-style tech companies: go broad, and build platforms rather than point solutions. The SaaS market is getting brutally reshaped by AI. Every day Amjad sees people using Replit to replace $15k-100k software products. Vertical SaaS companies are on death watch. If your product solves one specific problem, it's already possible to clone it with today's AI, and tomorrow's tools will make it even easier. Horizontal platforms with strong ecosystems (think Rippling as your "system of record" or Salesforce with its massive developer network) are more likely to survive. These aren't getting replaced by a weekend project. He predicts that generative capabilities and larger pieces of software will become more important.

[V]: 💻The Age of Abundance

In a recent essay titled "The Age Of Abundance," Tom Blomfield, Y Combinator partner and co-founder of Monzo and GoCardless, explores the rapidly accelerating progress of AI in software development. Blomfield counters the argument that LLMs will never be capable of writing production-grade code by highlighting the astonishing progress made in the last two years with tools like Claude 3.5 Sonnet and Gemini 2.5 Pro. Drawing from his own experience rebuilding his blog and creating RecipeNinja.ai using AI, Blomfield asserts that these tools are already providing significant productivity gains, making him "10x more productive." He envisions a near future where AI agents autonomously manage entire software development teams.

Blomfield also addresses the idea that Jevons' Paradox – where reduced cost leads to increased consumption – will save software engineers. While he agrees that the demand for software will likely increase, he argues that the supply from rapidly improving AI will meet and potentially exceed this demand, diminishing the need for human coders. He uses the analogy of self-driving cars being safer than human drivers to illustrate a tipping point where AI demonstrably outperforms humans in knowledge work. Blomfield suggests that software engineering is just the beginning, with similar transformations expected in fields like law and medicine.

Ultimately, Blomfield presents a future of intellectual abundance where high-quality knowledge work becomes incredibly affordable and accessible. He anticipates a rise in indie hackers leveraging AI tools but also expresses concern about potential societal disruption and the unequal distribution of benefits. While optimistic about the long-term potential for technological progress to improve lives, Blomfield urges professionals to quickly familiarize themselves with these new AI tools to navigate the changes ahead.
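The Jevons' Paradox point above can be made a little more concrete with a toy calculation. The constant-elasticity demand curve, the `scale` value, and the two elasticity numbers below are my own illustrative assumptions, not figures from Blomfield's essay. The sketch shows that when the price of something halves, total spending on it rises only if demand is elastic enough (elasticity above 1); it says nothing about whether humans or AI supply the extra output, which is the part Blomfield disputes.

```python
def demand(price: float, elasticity: float, scale: float = 100.0) -> float:
    """Quantity demanded under a constant-elasticity curve: Q = scale * P^(-elasticity)."""
    return scale * price ** (-elasticity)


def total_spend(price: float, elasticity: float, scale: float = 100.0) -> float:
    """Total spending is price times quantity demanded."""
    return price * demand(price, elasticity, scale)


if __name__ == "__main__":
    old_price, new_price = 1.0, 0.5  # the cost of producing software halves
    for elasticity in (0.5, 1.5):
        quantity_ratio = demand(new_price, elasticity) / demand(old_price, elasticity)
        spend_ratio = total_spend(new_price, elasticity) / total_spend(old_price, elasticity)
        print(
            f"elasticity={elasticity}: quantity x{quantity_ratio:.2f}, "
            f"total spend x{spend_ratio:.2f}"
        )
```

With the inelastic setting (0.5), consumption grows but total spending shrinks; with the elastic setting (1.5), both grow, which is the Jevons-style outcome.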

[X] 🎙 Podcast on AI and GenAI

(Additional) podcast episodes I listened to over the past few weeks:

Please share this newsletter with your friends and network if you found it informative!