My Other Publications:

Around the Web Issue 33: Artificializing Intelligence

Video Essays: The Desire Domino Effect, Aristotle on Modern Science, and The First Influencers.

Podcast

Note: this podcast was AI-generated with NotebookLM; read the actual newsletter and source materials, as applicable, for grounding.

In this newsletter

[LLM/AI for Science et al] 🤖 🦠 🧬

[I]: 🧬The Role of Natural Language in Representing Biology

In this essay, Sam Rodriques highlights the enthusiasm surrounding “foundation models” in the AI-for-biology community and contrasts it with the complexity of real biological discoveries. He points to recent studies on chromatin remodeling, tumor immune evasion, and sleep regulation—none of which, he argues, could readily emerge from current large-scale, structured models. The underlying issue, Rodriques explains, is that biology is often too messy for the highly structured inputs and outputs that most machine learning models require, meaning that each new insight might demand an entirely new representation space.

Rodriques then proposes that natural language is a vital, unavoidable tool for exploring and sharing new discoveries in biology. While structured approaches like protein, DNA, or transcriptomics models can be valuable, they are inherently limited in expressing the full diversity of biological phenomena. In contrast, language can capture unstructured, complex ideas, making it indispensable for the next wave of biological inquiry. The history of biology has always relied on “tools from nature,” and it appears that natural language may be one such tool that will guide us toward a deeper understanding of life’s intricacies.

[II]: 🐤Aviary: Training Language Agents on Scientific Tasks

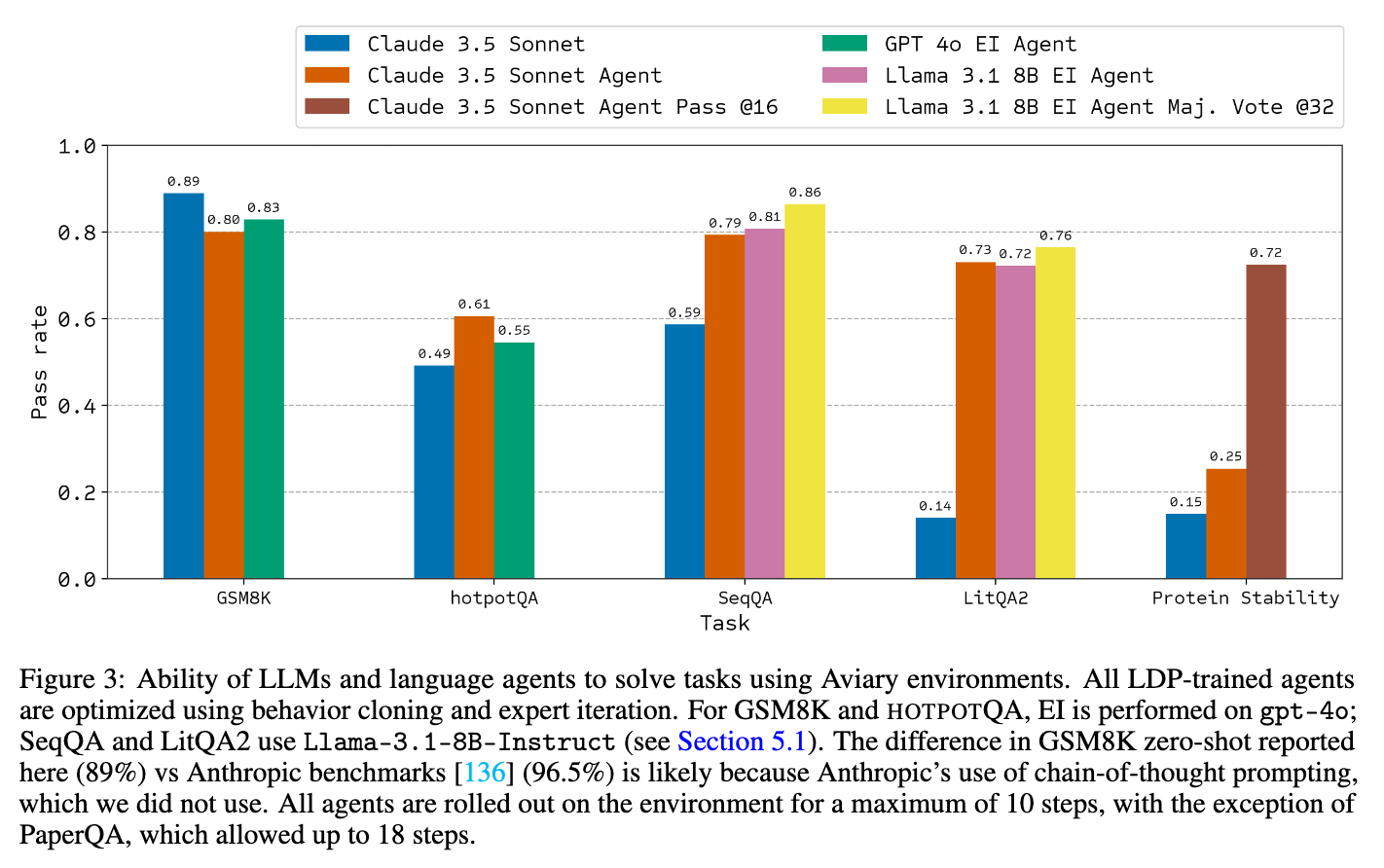

This paper introduces Aviary, a gymnasium for training and evaluating language agents using five unique environments, including three scientifically challenging ones. The work demonstrates that non-frontier large language models (LLMs) can be trained to match or exceed the performance of state-of-the-art models and humans on complex scientific tasks at significantly lower computational costs.

The authors formalize the behavior of language agents as solving language decision processes (LDPs), a variant of partially observable Markov decision processes (POMDPs) grounded in natural language actions and observations. Agents are represented as stochastic computation graphs, facilitating modular optimization of components like LLM weights, prompts, and memory. The Aviary framework implements five environments, including DNA construct manipulation, scientific literature question answering, and protein stability engineering, which require multi-step reasoning and tool interactions.
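To make the LDP framing concrete, here is a minimal sketch of the interaction loop under stated assumptions: a hypothetical toy environment (`ToyLDPEnv`) whose observations and actions are plain strings, and a scripted policy standing in for an LLM. None of these names come from Aviary's actual API. The policy only ever sees the history of observations, never the environment's internal `facts`, which is what makes the process partially observable.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StepResult:
    observation: str  # natural-language observation returned to the agent
    reward: float
    done: bool


@dataclass
class ToyLDPEnv:
    """Hypothetical toy environment: look up a hidden fact with a tool, then answer."""
    facts: dict[str, str]
    question_key: str
    history: list[str] = field(default_factory=list)

    def reset(self) -> str:
        self.history.clear()
        return f"Question: what is the value of '{self.question_key}'? Actions: lookup <key>, answer <value>"

    def step(self, action: str) -> StepResult:
        self.history.append(action)
        verb, _, arg = action.partition(" ")
        if verb == "lookup":  # a tool call: the result comes back as text
            return StepResult(f"Tool result: {arg} = {self.facts.get(arg, 'not found')}", 0.0, False)
        if verb == "answer":  # terminal action, rewarded only if correct
            correct = arg == self.facts.get(self.question_key)
            return StepResult("Episode finished.", 1.0 if correct else 0.0, True)
        return StepResult("Unrecognized action.", 0.0, False)


def run_episode(env: ToyLDPEnv, policy: Callable[[list[str]], str], max_steps: int = 5) -> float:
    """Roll out a policy that maps the observation history to a language action."""
    observations = [env.reset()]
    for _ in range(max_steps):
        result = env.step(policy(observations))
        observations.append(result.observation)
        if result.done:
            return result.reward
    return 0.0


def scripted_policy(observations: list[str]) -> str:
    """Stand-in for an LLM: look the fact up first, then answer with the tool's result."""
    last = observations[-1]
    if last.startswith("Tool result:"):
        return "answer " + last.split(" = ", 1)[1]
    key = observations[0].split("'")[1]  # the quoted key in the question
    return f"lookup {key}"
```

In Aviary's framing, the policy would be a stochastic computation graph (LLM call, prompt, memory) rather than a fixed function, which is what makes its pieces trainable.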

Training methods combine behavior cloning (initial supervised fine-tuning on high-quality trajectories) with expert iteration (iterative fine-tuning using filtered, agent-generated data). These approaches enable smaller, open-source models, such as Llama-3.1-8B-Instruct, to achieve parity with larger frontier models. Additionally, inference-time strategies like majority voting are employed to improve performance further. The trained agents exceed human and frontier LLM performance in benchmarks like SeqQA and LitQA2, emphasizing their cost-effectiveness and scalability.
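Two of these ingredients are simple enough to sketch directly. Below, `filter_for_expert_iteration` keeps only high-reward, agent-generated trajectories as fine-tuning data for the next round, and `majority_vote` aggregates several sampled answers at inference time. The `Trajectory` record and the reward threshold are assumptions for illustration; the paper's actual pipeline is more involved.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Trajectory:
    prompt: str
    actions: list[str]
    reward: float  # e.g. 1.0 if the final answer was verified correct


def filter_for_expert_iteration(trajectories: list[Trajectory], threshold: float = 1.0) -> list[Trajectory]:
    """Keep rollouts whose reward meets the threshold; these become SFT data for the next round."""
    return [t for t in trajectories if t.reward >= threshold]


def majority_vote(answers: list[str]) -> str:
    """Return the most common answer among samples; ties go to the answer seen first."""
    if not answers:
        raise ValueError("need at least one answer")
    return Counter(answers).most_common(1)[0][0]
```

A training round would then alternate between sampling rollouts with the current model, filtering them, and fine-tuning on what survives; majority voting only changes how answers are picked at test time, not the weights.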

GitHub repos for Aviary and LDP.

[AI/LLM Engineering] 🤖🖥⚙

[I]: 💻Gemini 2.0 for Developers

Google’s next wave of AI innovation, Gemini 2.0, offers developers significantly enhanced capabilities to build interactive, multimodal applications that span text, images, audio, and video, all through a single API. Gemini 2.0 Flash Experimental is faster, more powerful, and now includes native tool use, enabling developers to integrate Google Search or code execution directly into their workflows. Alongside these features, the new Multimodal Live API supports real-time audio and video streaming, setting the stage for more dynamic, collaborative experiences. See the Gemini 2.0 cookbook.

Beyond the technical enhancements, Gemini 2.0 marks a new era in AI-powered code assistance. Google introduced Jules, a code agent designed to handle tedious coding tasks—like bug fixing—so developers can focus on what matters. Integrated with GitHub and powered by Gemini 2.0, Jules can generate and refine solutions autonomously. Additionally, Colab’s data science agent now taps into Gemini 2.0, allowing users to simply describe what they want analyzed and watch as an interactive notebook is automatically created. Together, these innovations empower developers to build faster, more intuitively, and with greater creative freedom than ever before.

[II]: 🖱Hugging Face smolagents.

In this video tutorial, Sam Witteveen explores Hugging Face's new agent framework, "smolagents," a library designed for building agents with a focus on simplicity and code-based communication. Witteveen highlights the framework's ability to leverage open-source models from the Hugging Face Hub. He delves into the concept of "code agents," which utilize code for internal communication and action execution, offering a sandboxed environment for enhanced safety. Witteveen provides a practical demonstration of building and running agents, showcasing the use of tools, handling proprietary models like OpenAI's GPT-4, and customizing system prompts.
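The "code agent" idea, where the model writes Python as its action instead of emitting a JSON tool call, can be illustrated without the library. This is a minimal sketch under loud assumptions: `fake_model` is a hypothetical stand-in for an LLM, `get_weather` is a made-up custom tool, and the trimmed `exec` namespace is a toy, not a real sandbox and not how smolagents implements its executor.

```python
from typing import Callable


def get_weather(city: str) -> str:
    """Hypothetical custom tool with a typed input and output."""
    return {"Paris": "sunny", "London": "rainy"}.get(city, "unknown")


def fake_model(task: str) -> str:
    """Stand-in for an LLM that replies with a Python snippet as its action."""
    return 'result = {c: get_weather(c) for c in ["Paris", "London"]}\nfinal_answer(result)'


def run_code_agent(task: str, model: Callable[[str], str], tools: dict[str, Callable]) -> object:
    answers: list[object] = []
    # Only the listed tools, final_answer, and a couple of builtins are visible to the code.
    # NOTE: exec with a trimmed namespace is NOT a security boundary; it only shows the idea.
    namespace = {"__builtins__": {"len": len, "range": range}, **tools, "final_answer": answers.append}
    exec(model(task), namespace)
    if not answers:
        raise RuntimeError("the generated code never called final_answer")
    return answers[0]
```

One appeal of this style is visible even in the toy: a single action can loop over several tool calls and combine the results, which would take several round trips with one-tool-call-per-turn JSON actions.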

The video also touches upon the framework's support for tool-calling agents, similar to traditional agent frameworks, and the ability to define custom tools with specific inputs and outputs. These custom tools can also be pushed to the Hugging Face Hub, which could foster a collaborative ecosystem for agent development. The framework appears to offer some flexibility between agency and control, perhaps a middle ground between excessive agency (like BabyAGI or AutoGPT) and more restricted agency (like LangGraph). I haven’t used smolagents myself, and I feel more comfortable sticking with LangGraph going forward.

[III]: 👨‍💻Anthropic AI: Building Effective Agents

In this essay by Anthropic, the authors emphasize that the most effective LLM agents are built using simple, composable patterns rather than complex frameworks. They note that while agentic systems can be immensely powerful, developers should first explore the simplest solution—often just a single LLM call with retrieval and in-context examples—before adding layers of complexity.

A key distinction is drawn between “workflows,” which use predefined code paths to orchestrate LLM and tool usage, and more autonomous “agents” that dynamically direct their own processes. They outline five workflow patterns that increase flexibility in measured steps: Prompt Chaining (breaking tasks into sequential subtasks), Routing (classifying an input and sending it to a specialized follow-up), Parallelization (handling multiple task components at once, sometimes aggregating diverse outputs), Orchestrator-Workers (a central LLM that dynamically breaks down tasks and assigns them to specialized “workers”), and Evaluator-Optimizer (one LLM generating a response while another iteratively refines it based on feedback).
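To see where the control flow lives in these patterns, here is a minimal sketch of four of the five. `call_llm` is a hypothetical deterministic stub standing in for a real model call. In every function the surrounding code, not the model, decides what happens next, which is what makes these workflows rather than agents in Anthropic's terms.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

LLM = Callable[[str], str]


def call_llm(prompt: str) -> str:
    """Hypothetical stub standing in for a real model call."""
    if prompt.startswith("Classify:"):
        return "billing" if "invoice" in prompt else "technical"
    if prompt.startswith("Evaluate:"):
        return "PASS" if "v2" in prompt else "FAIL: too vague"
    if prompt.startswith("Improve:"):
        return "draft v2"
    return f"<{prompt}>"


def prompt_chain(task: str, steps: list[str], llm: LLM = call_llm) -> str:
    """Prompt chaining: each step's output becomes the next step's input."""
    text = task
    for step in steps:
        text = llm(f"{step}: {text}")
    return text


def route(query: str, handlers: dict[str, Callable[[str], str]], llm: LLM = call_llm) -> str:
    """Routing: classify once, then hand off to a specialized follow-up."""
    label = llm(f"Classify: {query}")
    return handlers[label](query)


def parallelize(prompts: list[str], llm: LLM = call_llm) -> list[str]:
    """Parallelization (sectioning): run independent subtasks concurrently, keep input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(llm, prompts))


def evaluator_optimizer(draft: str, llm: LLM = call_llm, max_rounds: int = 3) -> str:
    """Evaluator-optimizer: one call critiques, another revises, until the critique passes."""
    for _ in range(max_rounds):
        verdict = llm(f"Evaluate: {draft}")
        if verdict == "PASS":
            break
        draft = llm(f"Improve: {draft} given {verdict}")
    return draft
```

Orchestrator-workers is omitted for brevity; it differs mainly in that the list of subtasks passed to something like `parallelize` would itself be produced by an LLM call at run time.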

Agents, on the other hand, can operate independently for extended periods, relying on feedback from the environment at each step, and potentially pausing to gather human input. They handle open-ended problems that cannot be easily hardcoded into fixed paths, making them a good option for more unpredictable or complex tasks, such as coding assistance or specialized customer support scenarios.
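By contrast, an agent in this sense is a loop in which the model picks each next action from what the environment reports back. The sketch below is a toy under stated assumptions: the "environment" is a counter, `decide` is a hypothetical stand-in for the LLM, `ask_human` is a checkpoint callback for risky actions, and `max_steps` is the kind of stopping condition Anthropic suggests to keep control.

```python
from typing import Callable


def run_agent(
    goal: int,
    decide: Callable[[int, int], str],
    ask_human: Callable[[str], bool],
    max_steps: int = 20,
) -> tuple[int, list[str]]:
    """Toy agent loop: drive a counter to `goal`; no sequence of steps is hardcoded."""
    state, log = 0, []
    for _ in range(max_steps):
        action = decide(state, goal)  # the model chooses the next action from the current state
        if action == "done":
            break
        if action == "reset" and not ask_human("Agent wants to reset progress. Allow?"):
            log.append("reset denied by human")
            continue
        state = {"inc": state + 1, "dec": state - 1, "reset": 0}[action]
        log.append(f"{action} -> {state}")  # record the result; decide sees the new state next turn
    return state, log


def greedy_decider(state: int, goal: int) -> str:
    """Hypothetical stand-in for an LLM policy."""
    if state == goal:
        return "done"
    return "inc" if state < goal else "dec"
```

The same loop shape covers coding agents or support agents; what changes is the action space, the feedback (test results, tool output), and which actions warrant a human checkpoint.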

Throughout, the essay underscores the importance of thoughtful tool design and prompts, cautioning that frameworks can obscure key details and lead to errors. Developers are urged to keep things transparent and straightforward, building trust by focusing on reliability, easy-to-read interfaces, and meaningful user control, even as agentic capabilities become more advanced. One last comment pertains to how Anthropic’s framework for thinking about agents differs from LangChain’s (LangGraph). Anthropic places great emphasis on the autonomous capabilities of AI agents, while LangChain focuses more on the decision-making process within predefined application structures. As a result, some of Anthropic’s workflows, such as routing, would count as agents under LangChain’s definition.

[AI X Industry + Products] 🤖🖥👨🏿‍💻

[I]: 🌲OpenAI: 12 days of Shipmas.

In my last issue of The Epsilon Pulse (December 2024 Issue), I covered the 12 days of OpenAI up to Day 4; we will pick it up from there.

Day 5: ChatGPT integration with Apple Intelligence was announced.

Day 6: Advanced Voice Mode gained visual capabilities, allowing ChatGPT to see through device cameras.

Day 7: The Projects feature was introduced to help users organize their ChatGPT conversations. It has a NotebookLM-like feel.

Day 8: ChatGPT Search was made available to all users, including free tier.

Day 9: OpenAI released the o1 model in the API and introduced new developer tools. The API model supports function calling, structured outputs, and vision, among other things, and a new reasoning effort parameter lets developers control how long the model thinks before answering.

Day 10: A phone number (1-800-CHATGPT) was launched for accessing ChatGPT via voice calls.

Day 11: Expanded app integrations for ChatGPT were unveiled, including support for Notion and the ability to use voice mode while working with apps. The voice mode + work with apps combination is pretty nice.

Day 12: OpenAI previewed their upcoming o3 and o3-mini models, focusing on improved reasoning capabilities. o3 >> o1: it is incredible at programming, math, and PhD-level science.
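For the Day 9 release, reasoning effort is set per request. The sketch below only builds a Chat Completions request body with Python's standard library and does not send it. The parameter name `reasoning_effort` and the values `low`, `medium`, and `high` reflect OpenAI's API reference as I understand it at the time of writing; treat them as assumptions to check against the current docs, and note that access to `o1` in the API may depend on your account.

```python
import json

VALID_EFFORTS = {"low", "medium", "high"}


def build_o1_request(question: str, effort: str = "medium") -> str:
    """Build (but do not send) a JSON body for POST /v1/chat/completions."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"reasoning_effort must be one of {sorted(VALID_EFFORTS)}")
    body = {
        "model": "o1",
        "reasoning_effort": effort,  # lower effort: fewer reasoning tokens, usually faster and cheaper
        "messages": [{"role": "user", "content": question}],
    }
    return json.dumps(body)
```

The design choice worth noting is that effort is a per-request dial rather than a separate model, so one application can spend more reasoning on hard queries and less on easy ones.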

[II]: 🔍Google’s Gemini 2.0

Google’s latest AI release, Gemini 2.0, represents a major step forward in its mission to organize and use information to help people. As Sundar Pichai explains, Gemini 2.0 brings enhanced multimodality—handling text, images, audio, video, and code more naturally—and introduces “agentic” abilities: understanding context, taking multi-step actions, and even using tools like Search on a user’s behalf. Building on its previous models, Gemini 2.0 Flash offers faster, more capable performance and adds new capabilities such as native image generation, steerable text-to-speech, and direct access to tools. Case in point: “2.0 Flash even outperforms 1.5 Pro on key benchmarks, at twice the speed.”

All of this aligns with Google’s vision for a universal AI assistant that can provide deep research, integrate directly into Search and other core products, and assist with complex real-world tasks. Alongside Gemini 2.0, Google is experimenting with agentic prototypes, which are highlighted in some detail in Mr. Pichai’s announcement and also below.

[III]: 🔍Gemini Deep Research

Gemini, the AI assistant app, has been updated with several new features, two of which stand out: Deep Research (for Gemini Advanced subscribers) and Gemini 2.0 Flash. Deep Research (with 1.5 Pro) uses AI to conduct research for you, providing a very comprehensive report with key findings and links to original sources. I have played with Deep Research and it is not nothing: based on my internal use cases, it beats Perplexity Pro, and Perplexity Pro is already very good.

[IV]: 📽 Project Astra: Universal AI assistant

In this demo, Google showcases a prototype AI assistant with impressive capabilities. This AI can remember door codes, provide laundry instructions, offer information about local businesses and landmarks, assist with pronunciation, identify sculptures and discuss the artist's style, provide gardening advice, give book recommendations, navigate public transportation, provide weather updates, and identify parks. The demo shows the potential of Project Astra to become a powerful tool for everyday life, seamlessly integrating with devices like smartphones and AR glasses to provide information and assistance on the go.

[V]: 🫡 Project Mariner

Project Mariner is an early research prototype built with Gemini 2.0 that explores the future of human-agent interaction, starting with your browser. As a research prototype, it is able to understand and reason across information on your browser screen, including pixels and web elements like text, code, images, and forms, and then use that information via an experimental Chrome extension to complete tasks for you.

When evaluated against the WebVoyager benchmark, which tests agent performance on end-to-end real world web tasks, Project Mariner achieved a state-of-the-art result of 83.5% working as a single agent setup.

This prototype is similar in spirit to Anthropic’s computer use capability.

See demo for Project Mariner.

[VI]: Replit Agent: What are people building?

I ‘looked into’ Replit Agent over the winter break, and it looks like a new way of building apps is taking shape; however, I sense some over-hyping, or perhaps just folks with misplaced expectations. Some interesting use cases: a custom health dashboard, a fasting app, 2024 X Receipts, bespoke landing pages, and a habit tracker. Here is an official tutorial on building a file conversion app with Replit. They have also introduced Replit Assistant for making iterative changes and edits to an MVP. Here is some updated information on agent usage and billing. I really liked that they have a way to connect Replit and Cursor for Simple, Fast Deployments.

I am still not convinced about buying the subscription just yet, partly because of the rather negative feedback in the YouTube comment sections, but mostly because I don’t think I will have the time to invest in playing with it over the next month. I will consider reporting back once I get the chance to play with it myself. In any case, if you are wondering about getting started with Replit, here is a nice tutorial to watch.

[AI + Commentary] 📝🤖📰

[I]: 🤯o3 Model: Update All You Think You Know About AI

OpenAI’s year-end announcement of the o3 reasoning model was nothing short of staggering. Just fifteen days after releasing o1, they unveiled o3 (and its coding-oriented “mini” version), which outstrips previous results on AI benchmarks in math, coding, science, and complex problem-solving. This essay discusses the incredible implications of this model. Scores on tests like Codeforces, GPQA Diamond, and ARC-AGI soared to unprecedented levels, effortlessly surpassing previous bests by margins as large as 20% (or even 1200% on tougher tasks) and pushing many observers to call it a genuine breakthrough. While OpenAI isn’t giving immediate access to o3, they promise o3-mini’s public release in Q1 2025, followed by the full o3 model.

(I covered the ARC challenge on this newsletter back in June 2024.)

With this leap, what was once only dreamed of in AI labs is now knocking at the door. The ability to ace graduate-level “Google-proof” questions, consistently solve advanced math, and breeze through coding tasks previously thought insurmountable raises big questions about what comes next—both in AI research and in our day-to-day experiences. Although some experts caution against labeling o3 as “AGI,” the model’s meteoric progress stands as a clear message: we’re entering new territory, and everything we thought we knew about AI capabilities needs a serious update, argues the author of the essay.

[II]: 👴AI Productivity Gains Paradox.

In this very fine essay, Sangeet Paul Choudary explores the counterintuitive dynamics of productivity gains and profitability in the age of generative AI. Drawing on Charlie Munger’s insights, he illustrates how technological advancements, while boosting efficiency, often fail to benefit the adopters. Munger's anecdote about textile mills and new looms underlines a recurring pattern: cost reductions enhance customer benefits but erode producer margins. Choudary likens this to companies embracing AI-driven productivity in their core offerings, inadvertently ceding long-term pricing power to AI suppliers, as seen with the $200-per-month ChatGPT Pro subscription that unlocks o1 pro mode. The paradox underscores that productivity gains in core value chains often spell doom, while enhancements in complementary areas can reinforce competitive advantages.
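Munger's loom story is, at bottom, a piece of arithmetic. The toy calculation below uses made-up per-unit numbers and one loud assumption: once every mill adopts the loom, competition pushes prices down until each mill earns its old per-unit margin again. Under that assumption the cost saving ends up with customers, while the mills are left having paid for the new looms.

```python
def loom_outcome(cost: float, price: float, saving: float, all_adopt: bool) -> dict[str, float]:
    """Per-unit outcome for one mill after adopting a cost-saving loom (hypothetical numbers)."""
    margin_before = price - cost
    new_cost = cost - saving
    # A lone adopter keeps the old price; once every mill adopts, competition is
    # ASSUMED to cut the price until margins fall back to their old level.
    new_price = new_cost + margin_before if all_adopt else price
    return {
        "producer_margin": new_price - new_cost,
        "customer_saving": price - new_price,
    }
```

With a cost of 10, a price of 12, and a saving of 3, a lone adopter's margin jumps from 2 to 5, but once everyone adopts, the margin is back to 2 and customers pocket the 3. That is the sense in which a core-process productivity gain does not stay with the adopter.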

Choudary further examines strategies for navigating these shifts, emphasizing the importance of controlling profit pools and leveraging proprietary production advantages. He contrasts the strategic pivots of companies like Netflix, which built original content to escape dependency on suppliers, with Spotify’s ongoing struggle against music labels’ pricing power. The essay challenges businesses to rethink their adoption of generative AI: are they strengthening complementary functions or jeopardizing their core? Choudary's incisive analysis serves as a cautionary tale, urging firms to align AI adoption with sustainable profit strategies rather than chasing short-term efficiencies.

[III]: ⚙ Capital will Matter More After AGI

In this intellectually stimulating essay, L. Rudolf L argues that labor-replacing AI will drastically shift the balance of power between human and non-human factors of production. As AI becomes a more general substitute for human labour, physical and financial capital will become increasingly dominant. This economic transition risks making humans less relevant to societal functioning, reducing incentives for governments and institutions to prioritize human welfare while entrenching existing power structures.

Rudolf highlights that the replacement of human labour by AI will magnify the ability of money to convert directly into results, as AI systems can be cloned, vastly outperform human talents, and lack the complex motivations that make humans difficult to hire. At the same time, the leverage that most people derive from their labour—whether in terms of economic power or societal influence—will evaporate. The result may be a static society where ambition, innovation, and social mobility are stifled.

Even with potential measures like universal basic income (UBI), Rudolf warns that AI could consolidate wealth and power in the hands of those who control capital during the AI transition. This scenario could lead to a deeply unequal world, potentially resembling a digital feudal system, with limited opportunities for individuals to break free from their socio-economic origins. The potential lock-in of such disparities poses existential risks for dynamism and human ambition.

The essay concludes with a call to action, urging readers to seize this fleeting "dreamtime" of opportunity before the advent of transformative AI locks societal structures into place. Rudolf stresses the importance of preserving societal dynamism and ambition, cautioning against viewing the future of AI as an inevitable wall of human obsolescence. Instead, he advocates for seeking cracks in the emerging AI-driven order, fostering ambition, and preserving opportunities for disruption and innovation.

[IV]: 🗓 Deeplearning.ai: 2025 AI projections.

Andrew Ng, The Batch: Artificial intelligence in 2025 promises new possibilities for rapid prototyping, creative expression, and broader societal impact, according to AI leaders featured in this newsletter. Andrew Ng shares his excitement about AI-assisted software development, especially for building prototypes that help test ideas quickly, like automating tasks or generating personalized tools for family and work. He encourages everyone to learn continually in this fast-moving field and use AI to bring about real improvements — whether that means printing flash cards, creating financial monitoring tools, or analyzing customer feedback at scale.

Industry experts like Hanno Basse and David Ding envision a world where AI accelerates artistic, cinematic, and musical creativity by handling repetitive tasks, liberating humans to focus on the fun, inventive parts. Joseph Gonzalez, Albert Gu, and Mustafa Suleyman address the next wave of AI progress, from data-efficient models and advanced multimodality to “agentic” systems that can see what we see and act on our behalf. They expect innovations around trust, personalization, and the integration of AI into our daily workflow, effectively moving beyond chat interfaces into broader AI collaboration with human users.

Audrey Tang advocates for AI that unites rather than divides, calling for more inclusive algorithms and governance structures that strengthen empathy and democracy. Designing AI with prosocial values at its core (for instance, systems that highlight “bridging content” and incorporate real community needs) can foster healthier online spaces and elevate public discourse. Audrey Tang’s take is the one I find particularly exciting here, partly because this is one of the areas where the incentives to do the right thing don’t appear to be in place, and as such it is not frequently discussed by the people who can effect these changes. Quote: “For too long, the algorithms that drive social media have functioned like strip-mining machines, extracting attention while eroding trust and social cohesion. What remains are depleted online spaces, where empathy struggles to take root and collective problem-solving finds no fertile ground. AI can — and should — help us transcend these entrenched divides.”
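One simple way to picture “bridging content” is a ranking that rewards items approved across groups that usually disagree, rather than items with the most total approvals. The sketch below scores each item by its lowest per-group approval rate. The min-over-groups rule and the two-group data are simplifying assumptions, not any platform's method; X's Community Notes, for example, uses a matrix-factorization model instead.

```python
from collections import defaultdict


def bridging_scores(votes: list[tuple[str, str, bool]]) -> dict[str, float]:
    """votes: (item, rater_group, approved). Score = lowest per-group approval rate."""
    tallies: dict[str, dict[str, list[int]]] = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for item, group, approved in votes:
        tally = tallies[item][group]
        tally[0] += int(approved)  # approvals
        tally[1] += 1              # total ratings
    return {
        item: min(approved / total for approved, total in groups.values())
        for item, groups in tallies.items()
    }


def popularity_scores(votes: list[tuple[str, str, bool]]) -> dict[str, int]:
    """Baseline: raw approval count, which rewards whichever group is larger or louder."""
    counts: dict[str, int] = defaultdict(int)
    for item, _, approved in votes:
        counts[item] += int(approved)
    return dict(counts)
```

With one item approved 4 of 4 times by group A but 0 of 2 times by group B, and another approved 2 of 3 times by A and 1 of 2 times by B, popularity ranks the first item higher (4 vs. 3) while the bridging score ranks the second higher (0.5 vs. 0.0). A real system would also need to handle items that only one group has rated.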

[V]: 🎙 Podcast on AI and GenAI

(Additional) podcast episodes I listened to over the past few weeks

Please share this newsletter with your friends and network if you found it informative!