Note: A friend and I are currently working on an AI project, mostly in the science space, and we are searching for a technical partner with full-stack software engineering expertise. Please reach out to me via obifarin@yahoo.com or simply reply to this newsletter so we can schedule a time to chat. Ideally, we are looking for a candidate based in the United States.

My Other Publications:

Around the Web Issue #31: Exercise: The Best Medication

AI generated Podcast for ε Pulse: Issue #18

I recently experimented with Google’s NotebookLM audio generation/podcast feature, which I covered in the last issue of The Pulse.

The goal was to turn my newsletter, which can be long and perhaps a bit dry for folks who are not really into AI, into something more engaging. Given the newsletter's structure, the result is impressive and comes quite close, although, as expected, it doesn't capture every story featured and makes a few mistakes. Here is the pod:

In this newsletter:

👨‍💻

Journey to Superhuman Performance on Scientific Tasks

LangGraph Templates

Orchestrating a Story Writing App with AI agents.

Prompt Management for LLM Apps

🏭

Salesforce Unleashes its First AI Agents

YouTube will use AI to Generate Videos

Meta Connect 2024: Llama 3.2, etc

OpenAI updates: o1, Realtime API, Canvas et al

📝

o1: A New Paradigm in Foundation Models

The AI Dilemma: Navigating the Road Ahead

Rapid Adoption of Generative AI

Sam Altman on The Intelligence Age

[AI/LLM Engineering] 🤖🖥⚙

[I]: 🥼 Journey to Superhuman Performance on Scientific Tasks

In the last issue of Pulse, I wrote about a paper from FutureHouse: “PaperQA2: Language Agents Achieve Superhuman Synthesis of Scientific Knowledge.” Their advanced RAG system has demonstrated capabilities surpassing those of human experts in several key areas: answering complex scientific questions, composing comprehensive literature reviews, and identifying inconsistencies within published research.

They recently published a detailed engineering companion to the paper, in which they describe their system design decisions. In brief, they used an agentic RAG system:

“Differing needs in RAG applications can warrant a subset of these features being used, and rather than having a user make a choice for each application, an “agent” model can be used to automatically select and utilize features.”

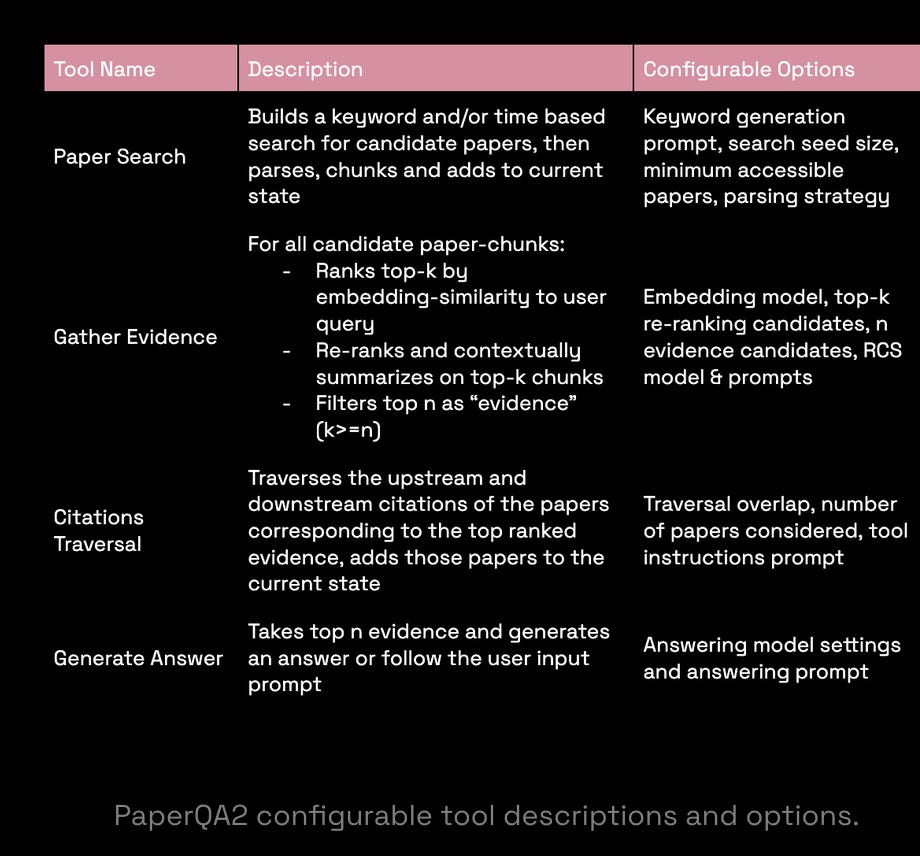

Above: PaperQA2's configurable tool descriptions and options.

Another important component of the agentic system is LLM re-ranking and contextual summarization (RCS):

“Using the user query, citation information, and the chunk content, a prompt is formulated that asks the LLM to output both a relevance evaluation and summarization of each chunk in the context of the query. The LLM is prompted to score the relevance of the chunk (between 1 and 10) along with providing its summary (<= 300 words). The model’s output score is then used to re-rank the summaries before answering.”

This approach improves accuracy and reduces token usage: document chunks are re-ranked and summarized before being fed to the final answer prompt, which allows the LLM to reason more effectively. The engineering blog also details their citation traversal and improved parsing algorithms. It’s quite an upgrade!
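A minimal sketch of the RCS step described in the quote, to show its shape: score each chunk from 1 to 10, summarize it, sort by score, and pass only the top summaries to the answer prompt. This is not PaperQA2's code. `score_and_summarize` is a hypothetical stand-in for the LLM call (here a toy keyword-overlap scorer so the example runs offline), and the sample chunks and citations are made up.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    citation: str
    score: int      # relevance score in [1, 10]
    summary: str    # short, query-focused summary of the chunk


def score_and_summarize(query: str, citation: str, chunk: str) -> Evidence:
    """Hypothetical stand-in for the LLM call in RCS.

    A real system would prompt an LLM with the query, citation, and chunk and
    parse a 1-10 relevance score plus a summary (<= 300 words). Here we score
    by keyword overlap so the sketch runs without an API.
    """
    overlap = len(set(query.lower().split()) & set(chunk.lower().split()))
    score = max(1, min(10, 1 + 2 * overlap))   # clamp into [1, 10]
    summary = " ".join(chunk.split()[:300])    # enforce the word limit
    return Evidence(citation=citation, score=score, summary=summary)


def rerank_and_summarize(query: str, chunks: list[tuple[str, str]], top_k: int = 2) -> list[Evidence]:
    """Score and summarize every chunk, then keep the top_k by relevance."""
    evidence = [score_and_summarize(query, cite, text) for cite, text in chunks]
    evidence.sort(key=lambda e: e.score, reverse=True)
    return evidence[:top_k]


def build_answer_context(evidence: list[Evidence]) -> str:
    """Only the short summaries, not the raw chunks, go into the answer prompt."""
    return "\n".join(f"[{e.citation}] (score {e.score}) {e.summary}" for e in evidence)


chunks = [
    ("Smith 2023", "CRISPR editing efficiency improved in primary T cells"),
    ("Lee 2021", "A survey of protein folding benchmarks"),
    ("Wu 2022", "CRISPR off-target effects in T cells were rare"),
]
top = rerank_and_summarize("CRISPR efficiency in T cells", chunks)
print(build_answer_context(top))
```

The irrelevant chunk is dropped before the answer step, which is where the token savings come from.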

[II]: 👨‍💻 LangGraph Templates

LangGraph seems to be a recurring theme in recent issues of this newsletter. This is clearly because it is the agentic framework I have invested the most time playing with, and I like it. I have tried LangGraph Engineer a bit, and it sort of works. Perhaps orthogonal (and hopefully complementary?) to that product are the recently launched LangGraph Templates, a new feature that makes it easier to get started building agentic applications. A friendly reminder: LangGraph is a low-level, highly controllable agentic framework, and the templates are reference architectures built on top of it. There are four templates available:

Empty project: Start from scratch.

Data enrichment template: This template does web research and fills out information about a particular topic.

ReAct agent template: This is a general cognitive architecture that basically calls tools in a loop until it's done.

Retrieval agent: This template uses a vector store to do retrieval over documents of your choosing.
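The "calls tools in a loop until it's done" idea behind the ReAct template can be sketched in plain Python. This is not LangGraph's API: `fake_model` is a hypothetical scripted stand-in for an LLM that either requests a tool call or returns a final answer, and `TOOLS` is an illustrative tool registry.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> function taking a string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
    "upper": lambda arg: arg.upper(),
}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM.

    Returns {"tool": name, "input": arg} to request a tool call,
    or {"final": answer} once it decides it is done.
    """
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "add", "input": "2+3"}
    return {"final": f"The answer is {tool_results[-1]['content']}"}


def react_loop(question: str, model=fake_model, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = model(messages)                   # reason: pick the next action
        if "final" in decision:                      # the model says it is done
            return decision["final"]
        result = TOOLS[decision["tool"]](decision["input"])  # act: run the tool
        messages.append({"role": "tool", "content": result})  # observe the result
    return "Stopped: step limit reached"


print(react_loop("What is 2 + 3?"))
```

The `max_steps` cap is a design choice worth keeping in any real agent, since a model that never decides it is done would otherwise loop forever.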

[III]: 🔖 Orchestrating a Story Writing App with AI agents.

This is one of the more complex AI agents I have seen built in a relatively short YouTube video. Briefly, the agent represents the story as a graph: each node is a chapter, and the graph also stores the relationships between chapters, such as which chapter is the parent of another. To write a new chapter, the agent uses several LLMs, including Claude 3.5 Sonnet and GPT-4o, to brainstorm ideas for the chapter, outline it, and write the chapter text, which users can then edit as they see fit. The agent also uses a number of helper functions, for example to summarize the story up to the current chapter and to generate chapter titles. Cost aside, I am not sure there is anything in knowledge work that can’t be built today.
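The chapter graph can be sketched as a small data structure. The names here (`Chapter`, `StoryGraph`, `story_so_far`) are hypothetical and not taken from the video, and `summarize` is a placeholder for the LLM summarization call. The point is that each chapter keeps a link to its parent, so the context for a new chapter is built by walking the parent chain back to the root.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Chapter:
    chapter_id: str
    title: str
    text: str
    parent_id: Optional[str] = None  # the chapter this one continues from


@dataclass
class StoryGraph:
    chapters: dict[str, Chapter] = field(default_factory=dict)

    def add(self, chapter: Chapter) -> None:
        if chapter.parent_id is not None and chapter.parent_id not in self.chapters:
            raise KeyError(f"unknown parent {chapter.parent_id}")
        self.chapters[chapter.chapter_id] = chapter

    def children(self, chapter_id: str) -> list[str]:
        """Chapters that branch off from the given chapter."""
        return [c.chapter_id for c in self.chapters.values() if c.parent_id == chapter_id]

    def path_to(self, chapter_id: str) -> list[Chapter]:
        """Walk parent links back to the root; return chapters in reading order."""
        path = []
        current: Optional[str] = chapter_id
        while current is not None:
            chapter = self.chapters[current]
            path.append(chapter)
            current = chapter.parent_id
        return list(reversed(path))


def summarize(text: str, max_words: int = 8) -> str:
    """Placeholder for an LLM summarization call: keeps the first few words."""
    return " ".join(text.split()[:max_words])


def story_so_far(graph: StoryGraph, chapter_id: str) -> str:
    """Context a writer model would receive before drafting the next chapter."""
    return "\n".join(f"{c.title}: {summarize(c.text)}" for c in graph.path_to(chapter_id))


graph = StoryGraph()
graph.add(Chapter("c1", "Arrival", "Mara reaches the harbor town at dusk."))
graph.add(Chapter("c2", "The Map", "She finds a torn map in the inn.", parent_id="c1"))
graph.add(Chapter("c2b", "The Storm", "A storm traps her on the pier.", parent_id="c1"))
print(story_so_far(graph, "c2"))
```

Because chapters can branch ("c2" and "c2b" share a parent), only the chapters on the path to the current one feed the summary, not the whole graph.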

[IV]: 👨‍💻 Prompt Management for LLM Apps

If you have ever attempted to write an LLM-based app, then you probably don’t need a pitch on how critical prompt management is. I have recently started to get more hands-on with this part of the stack, and LangSmith has been my go-to. I am sure there are many other platforms out there, but I personally don’t have the bandwidth to try all of them. LangSmith's prompt management is pretty cool: it lets you commit your prompts, manage them programmatically, and pull them neatly from the prompt hub instead of littering your code with prompt text, and it also links the traces logged with each prompt. Here is a how-to guide. One downside to separating your prompts from your code in this manner is that you may miss out on using AI coding tools like Cursor to improve your prompts within your code base.
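To illustrate the pattern (commit, tag, pull by name), here is a hypothetical in-memory prompt registry. It is not LangSmith's SDK, and `PromptRegistry`, `commit`, `tag`, and `pull` are illustrative names only. Each commit gets a short content hash, a tag such as `prod` can point at a specific commit, and application code pulls the prompt by name instead of embedding its text.

```python
import hashlib
from string import Template


class PromptRegistry:
    """Hypothetical in-memory prompt store illustrating commit/pull by name."""

    def __init__(self) -> None:
        self._commits: dict[str, list[tuple[str, str]]] = {}  # name -> [(hash, text)]
        self._tags: dict[tuple[str, str], str] = {}            # (name, tag) -> hash

    def commit(self, name: str, text: str) -> str:
        """Store a new version of a prompt and return its short content hash."""
        digest = hashlib.sha256(text.encode()).hexdigest()[:8]
        self._commits.setdefault(name, []).append((digest, text))
        return digest

    def tag(self, name: str, tag: str, commit_hash: str) -> None:
        """Point a tag (e.g. 'prod') at an existing commit."""
        if commit_hash not in (h for h, _ in self._commits[name]):
            raise KeyError(commit_hash)
        self._tags[(name, tag)] = commit_hash

    def pull(self, name: str, ref: str = "latest") -> str:
        """Return the latest version, or the version a tag or hash refers to."""
        versions = self._commits[name]
        if ref == "latest":
            return versions[-1][1]
        target = self._tags.get((name, ref), ref)  # resolve a tag, else treat ref as a hash
        for digest, text in versions:
            if digest == target:
                return text
        raise KeyError(f"{name}@{ref}")


registry = PromptRegistry()
v1 = registry.commit("summarize", "Summarize this text: $text")
registry.tag("summarize", "prod", v1)
registry.commit("summarize", "Summarize this text in three bullet points: $text")

# Application code pulls by name and tag instead of hard-coding the prompt text.
prompt = Template(registry.pull("summarize", "prod")).substitute(text="hello world")
print(prompt)
```

Pinning production to a tag lets you commit new prompt versions without changing what deployed code pulls until you move the tag.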

[AI X Industry + Products] 🤖🖥👨🏿‍💻

[I]: 🛒 Salesforce Unleashes its First AI Agents

Out of the box, Salesforce is offering a handful of agents that can act as a sales rep, service agent, personal shopper, or sales coach.

Agentforce also includes a low-code option to build additional agents and options to bring in agents and models from others.

Salesforce says Agentforce starts at $2 per conversation, with volume discounts for larger customers.

Video Link

Also see: Salesforce’s New AI Strategy Acknowledges That AI Will Take Jobs

[II]: 📹 YouTube will use AI to Generate Ideas, Titles, and even Full Videos

Not surprised at all by this one. Google still appears to me to be the company that might benefit the most from this AI boom; it has a foot in so many ponds that it’s mind-blowing to think about. Link to read and watch.

[III]: 🔌 Meta Connect 2024

Interesting products and updates at Meta Connect.

The Meta Quest 3S mixed reality headset; Llama 3.2, now multimodal (image and text); voice for Meta AI; automatic video dubbing on Reels (starting with Spanish and English); the Orion AR glasses; and more.

See more on Meta Llama 3.2: A Deep Dive into Vision Capabilities.

[IV]: 🫢 OpenAI updates: o1, Realtime API, Canvas et al

This video covers four significant announcements from OpenAI's DevDay: the Realtime API for natural speech conversations, vision fine-tuning for enhanced visual capabilities, prompt caching for cost efficiency, and model distillation for fine-tuning smaller, more efficient models on the outputs of larger ones. It also discusses how these features can improve your applications.

Link to OpenAI Dev Day in 5 minutes.
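Prompt caching works on exact prompt prefixes: OpenAI's documentation says cache hits require an identical leading portion of the prompt, so static material (instructions, examples) should come first and per-request content last. The sketch below only illustrates why that ordering matters. It calls no API, approximates tokens with whitespace-split words, and the prompts are made up.

```python
def shared_prefix_tokens(a: str, b: str) -> int:
    """Count leading tokens two prompts share (words as a crude token proxy)."""
    count = 0
    for x, y in zip(a.split(), b.split()):
        if x != y:
            break
        count += 1
    return count


INSTRUCTIONS = "You are a careful assistant. Answer using only the provided context. " * 3
questions = ["Define LangGraph.", "Explain prompt caching."]

# Variable content first: the prompts diverge at the first token, so nothing is reusable.
dynamic_first = [q + " " + INSTRUCTIONS for q in questions]
# Static content first: the long instruction block is an identical, reusable prefix.
static_first = [INSTRUCTIONS + q for q in questions]

print(shared_prefix_tokens(*dynamic_first))
print(shared_prefix_tokens(*static_first))
```

The same instruction text is present in both layouts; only its position decides whether a prefix cache can reuse it.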

They also recently launched Canvas, a direct response to Anthropic’s Artifacts: a new interface for working with ChatGPT on writing and coding projects that go beyond simple chat. You can watch the demo here, and here is the full blog. This feature looks very powerful; they took Artifacts and made it better.

Also see: Nature’s ‘In awe’: scientists impressed by latest ChatGPT model o1

See more on o1 below.

[AI + Commentary] 📝🤖📰

[I]: ⚓ o1: A New Paradigm in Foundation Models

Or, “How o1 works,” by Theory Ventures. I enjoyed reading the essay; here is a brief summary:

OpenAI's o1 model represents a paradigm shift in LLM development, moving beyond simple scaling to introduce training-time reinforcement learning and inference-time search. The approach enhances the model's reasoning capabilities by focusing on two key areas: improved data distribution modeling and advanced search strategies.

o1 refines its data distribution modeling through specialized training on reasoning tasks, including math, logic, and human-rated problems. This process develops a robust understanding of reasoning strategies. Additionally, o1 employs search techniques during inference, allowing for more effective exploration of its knowledge base.

This dual approach has significant implications for LLM applications, they argued. Companies will need to consider "time budgets" for AI features, balancing response time with answer quality. There's also an opportunity to create competitive advantages through domain-specific reasoning datasets.
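To make the "time budget" idea concrete, here is a generic sketch of inference-time search: keep sampling candidate answers until the budget runs out, then return the one a verifier scores highest. This is best-of-N sampling, a well-known search strategy, and not a description of how o1 works internally, which OpenAI has not published. `sample_answer`, `verifier_score`, and the 200 ms per-sample cost are hypothetical, and the clock is simulated so results are deterministic.

```python
import random
from typing import Optional


def sample_answer(rng: random.Random) -> int:
    """Hypothetical model call: a noisy guess at 17 * 24."""
    return 408 + rng.choice([-10, -1, 0, 0, 1, 10])


def verifier_score(answer: int) -> float:
    """Hypothetical verifier: higher is better (this task happens to be checkable)."""
    return -abs(answer - 17 * 24)


def best_of_n(budget_ms: int, cost_per_sample_ms: int = 200, seed: int = 0) -> tuple[Optional[int], int]:
    """Spend the time budget on samples; return (best answer, samples drawn)."""
    rng = random.Random(seed)
    elapsed, n = 0, 0
    best: Optional[int] = None
    best_score = float("-inf")
    while elapsed + cost_per_sample_ms <= budget_ms:
        answer = sample_answer(rng)
        elapsed += cost_per_sample_ms  # simulated latency of one model call
        n += 1
        score = verifier_score(answer)
        if score > best_score:
            best, best_score = answer, score
    return best, n


for budget in (200, 1000, 3000):
    answer, n = best_of_n(budget)
    print(budget, n, answer)
```

With a fixed seed, a larger budget sees a superset of the same samples, so answer quality can only stay the same or improve while latency grows: the response-time versus quality trade-off the essay describes.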

The success of o1 suggests future LLM development will focus on both improving the underlying model and optimizing knowledge access and application. Theory Ventures also argued that the shift towards more complex systems in training and deployment may widen the gap between open-source and closed-source models.

As LLMs evolve, we can expect increased emphasis on specialized training data, advanced search strategies, and tailored deployment methods, potentially opening new possibilities for AI applications across various domains.

[II]: 👝 The AI Dilemma: Navigating the Road Ahead with Tristan Harris

We don’t talk about AI safety as much as we should. In this masterful presentation, Tristan Harris talks about the risks and challenges of AI and how to navigate them.

He argues that AI is much more powerful than previous technologies and that our current governance systems are not equipped to handle it (I doubt any reasonable person will quibble with this point). He compares AI to 21st-century technology crashing down on 16th-century governance.

Here are the key points from the talk:

AI is amplifying human power to an exponential degree.

Social media, which can be seen as a form of AI, has already caused many problems, such as addiction, misinformation, and mental health issues.

The current incentive structure in AI development prioritizes speed and engagement over safety and societal well-being. This could lead to dangerous consequences, such as the spread of deepfakes and autonomous weapons.

We need to upgrade our governance systems to keep pace with the development of AI. This could involve measures such as requiring safety assessments for AI models and protecting AI whistleblowers.

[III]: ⚗ Rapid Adoption of Generative AI

Very nice paper from The National Bureau of Economic Research (NBER) on the rapid adoption of genAI.

Some key takeaways:

Rapid and widespread adoption: As of August 2024, 39.4% of U.S. adults aged 18-64 reported using generative AI, with 28% using it at work. This adoption rate is faster than the historical adoption of personal computers and the internet. (This is probably expected, given how little friction there is in getting started with genAI.)

Frequent work usage: Among employed users, 24.2% used generative AI at least weekly for work, and 10.6% used it daily.

Broad application across occupations: While adoption was highest in computer/math (49.6%) and management (49%) occupations, even 22.1% of "blue collar" workers reported using generative AI at work.

Diverse tasks: At work, generative AI was most commonly used for writing (56.9%), searching for information (49.4%), and obtaining detailed instructions (47.8%). But it was used for a wide variety of tasks across occupations.

Demographic differences: Usage was higher among men, younger workers, those with higher education levels, and in STEM fields. However, the differences were less pronounced than with earlier technologies like PCs.

Potential productivity impact: The researchers estimate that between 0.5% and 3.5% of all work hours are currently assisted by generative AI. This could potentially increase labor productivity by 0.125 to 0.875 percentage points, though this estimate is speculative.

General-purpose technology: The broad adoption across occupations and tasks supports the idea that generative AI is a true general-purpose technology with wide-ranging applications.
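The productivity range above is simple arithmetic. Both endpoints are consistent with multiplying the share of assisted work hours by a 25% productivity gain on those hours; that 25% is implied by the quoted figures rather than stated in this summary, so treat it as an assumption:

```latex
\begin{aligned}
\Delta(\text{labor productivity}) &\approx (\text{share of work hours assisted}) \times (\text{gain on assisted hours}) \\
0.5\% \times 25\% &= 0.125 \text{ percentage points} \\
3.5\% \times 25\% &= 0.875 \text{ percentage points}
\end{aligned}
```

The estimate scales linearly in both factors, so it rises as usage spreads or as the per-hour gain turns out larger.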

Interesting table from the paper to muse over.

[IV]: 🧠 Sam Altman on The Intelligence Age

In the next couple of decades, we will be able to do things that would have seemed like magic to our grandparents.

This phenomenon is not new, but it will be newly accelerated. People have become dramatically more capable over time; we can already accomplish things now that our predecessors would have believed to be impossible.

…

This may turn out to be the most consequential fact about all of history so far. It is possible that we will have superintelligence in a few thousand days (!); it may take longer, but I’m confident we’ll get there.

How did we get to the doorstep of the next leap in prosperity?

In three words: deep learning worked.

In 15 words: deep learning worked, got predictably better with scale, and we dedicated increasing resources to it.

Link to full essay.

[V]: 🎙 Podcast on AI and GenAI

(Additional) podcast episodes I listened to over the past few weeks.